In this tutorial, we are going to discuss the Partitioner in Hadoop MapReduce. What is Hadoop Partitioner, what is the necessity of Partitioner in Hadoop, What is the default Partitioner in MapReduce, How many MapReduce Partitioner are used in Hadoop? We will answer all these questions in this MapReduce tutorial.

What is MapReduce Partitioner in Hadoop?

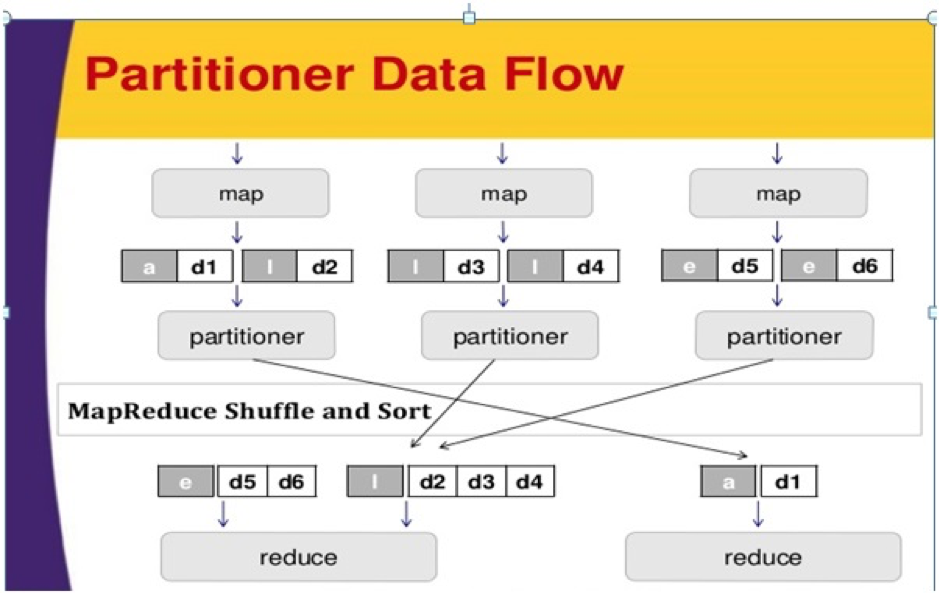

Partitioner in MapReduce job execution manages the partitioning of the keys of the intermediate map-outputs. With the support of the hash function, the key (or a subset of the key) derives the partition. The total number of partitions is similar to the number of reduce tasks.

Based on key-value, framework partitions, each mapper output. Records as having the same key value enter the same partition (within each mapper). Then every partition is sent to a reducer. Partition class chooses which partition a given (key, value) pair will go. Partition phase in MapReduce data flow occurs after the map phase and before the reduce phase.

Need of MapReduce Partitioner in Hadoop

In MapReduce job execution, it takes an input data set and generates the list of key-value pairs. These key-value pair is the outcome of the map phase. In which input data are divided and each task processes the split and each map, output the list of key-value pairs. Therefore, the framework sends the map output to reduce the task. Reduce handles the user-defined reduce function on map outputs. Before reducing the phase, partitioning of the map output takes place based on the key.

Hadoop Partitioning states that all the values for each key are grouped. It also makes sure that all the values of a single key go to the same reducer. This permits even distribution of the map output over the reducer.

Partitioner in a MapReduce job readdresses the mapper output to the reducer by determining which reducer handles the particular key.

Hadoop Default Partitioner

Hash Partitioner is the default Partitioner. It calculates a hash value for the key. It also allocates the partition based on this result.

How many Partitioners in Hadoop?

The total number of Partitioner relies on the number of reducers. Hadoop Partitioner splits the data according to the number of reducers. It is set by JobConf.setNumReduceTasks() method. Thus the single reducer handles the data from a single partitioner. The main thing to notice is that the framework generates partitioner only when there are many reducers.

Poor Partitioning in Hadoop MapReduce

If in data input in MapReduce job one key emerges more than any other key. In such a case, to send data to the partition we use two mechanisms which are as follows:

• The key emerging more number of times will be sent to one partition.

• All the other key will be sent to partitions based on their hashCode().

If the hashCode() method does not divide other key data over the partition range. Then data will not be sent to the reducers.

Poor partitioning of data means that some reducers will have more data input as related to others. They will have more jobs to do than other reducers. Thus the complete job has to wait for one reducer to finish its extra-large share of the load.

How to overcome poor partitioning in MapReduce?

To overcome poor partitioner in Hadoop MapReduce, we can generate a custom partitioner. This permits sharing workload across multiple reducers.

Conclusion

In conclusion, Partitioner permits uniform distribution of the map output over the reducer. In MapReduce Partitioner, partitioning of map output occurs based on the key and value. Therefore, we have discussed the complete overview of the Partitioner in this tutorial. I hope you liked it.