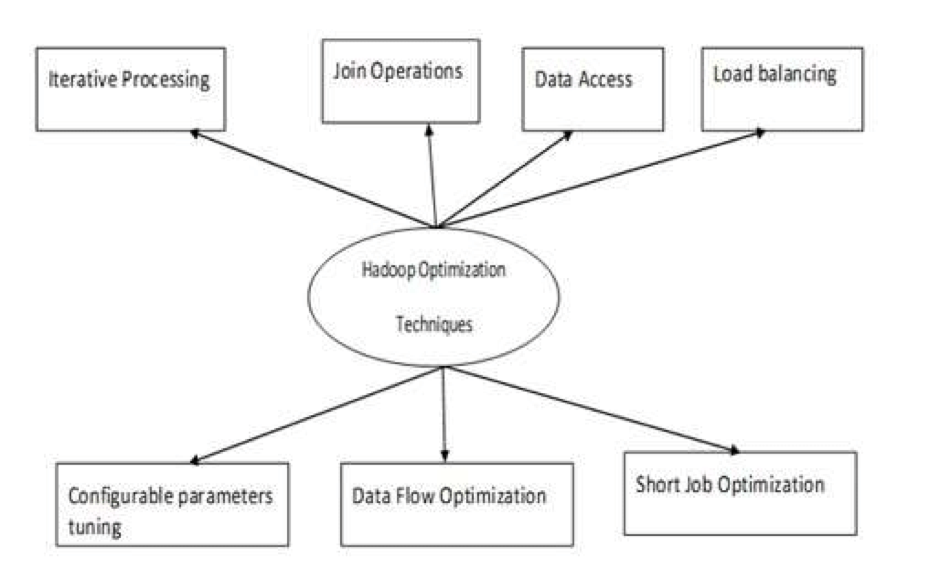

MapReduce Job Optimization Techniques: This tutorial covers six tips for optimizing MapReduce jobs: proper configuration of your cluster, LZO compression of intermediate data, proper tuning of the number of MapReduce tasks, using a combiner between mapper and reducer, choosing the most appropriate and compact Writable type for your data, and reusing Writables.

Tips for MapReduce Job Optimization

The following techniques can help you improve MapReduce job performance.

Proper configuration of your cluster

• Mount the DFS and MapReduce storage with the noatime option. This disables access-time updates and therefore improves I/O performance.

• Avoid RAID on TaskTracker and DataNode machines. It generally decreases performance.

• Make sure that mapred.local.dir and dfs.data.dir are configured to point to one directory on each of your disks, so that all of your I/O capacity is used.

• Use monitoring software to graph swap usage and network usage. If you see that swap is being used, reduce the amount of RAM allotted to each task in mapred.child.java.opts.

• Make sure that you have smart monitoring of the health status of your disk drives. This is one of the key practices for MapReduce performance tuning.
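The directory and memory settings above can be sketched in a few lines of Java. This is a minimal illustration, not Hadoop code: the class ClusterConfigSketch, its method names, the mount points, and the memory figures are all hypothetical, chosen only to show how one directory per disk and a per-task heap that fits in physical RAM might be derived.

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical helper: derives values one might place in mapred.local.dir,
// dfs.data.dir and mapred.child.java.opts. It does not talk to Hadoop.
final class ClusterConfigSketch {

    // Builds a comma-separated list with one directory per disk mount point,
    // so that I/O is spread across every disk.
    static String onePerDisk(List<String> mountPoints, String subDir) {
        return mountPoints.stream()
                .map(mount -> mount + "/" + subDir)
                .collect(Collectors.joining(","));
    }

    // Largest per-task heap (in MB) such that all task slots together fit in
    // the RAM left after reserving memory for the OS and the Hadoop daemons.
    static int maxHeapMbPerTask(int totalRamMb, int reservedMb, int taskSlots) {
        if (taskSlots <= 0) {
            throw new IllegalArgumentException("taskSlots must be positive");
        }
        return Math.max(0, totalRamMb - reservedMb) / taskSlots;
    }

    public static void main(String[] args) {
        // Assumed mount points; substitute the disks on your own nodes.
        List<String> disks = Arrays.asList("/disk1", "/disk2", "/disk3");
        System.out.println("mapred.local.dir=" + onePerDisk(disks, "mapred/local"));
        System.out.println("dfs.data.dir=" + onePerDisk(disks, "dfs/data"));

        // Assumed node: 16 GB RAM, 4 GB reserved, 12 task slots in total.
        int heapMb = maxHeapMbPerTask(16384, 4096, 12);
        System.out.println("mapred.child.java.opts=-Xmx" + heapMb + "m");
    }
}
```

If the monitoring graphs still show swap activity with a heap sized this way, the reserved amount was probably too small and the per-task heap should be lowered further.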

LZO compression usage

For intermediate data, this is almost always a good idea. Every Hadoop job that generates a non-negligible amount of map output will benefit from compressing that intermediate data with LZO.

Although LZO adds a little CPU overhead, it saves time by reducing the amount of disk I/O during the shuffle.

Set mapred.compress.map.output to true to enable compression of map output, and select the LZO codec through mapred.map.output.compression.codec.
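As a sketch of how such settings might be supplied, the snippet below assembles mapred.compress.map.output together with the codec property mapred.map.output.compression.codec as -D generic options for a job launched from the command line. The helper class MapOutputCompression is hypothetical, the codec class name com.hadoop.compression.lzo.LzoCodec assumes that the separately installed hadoop-lzo library is available on the cluster, and -D options are only picked up if the job's driver parses generic options.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.stream.Collectors;

// Hypothetical helper that formats job properties as -D generic options.
final class MapOutputCompression {

    // Properties that switch on map output compression and select LZO.
    // The codec class name assumes the hadoop-lzo library is installed.
    static Map<String, String> lzoProperties() {
        Map<String, String> props = new LinkedHashMap<>();
        props.put("mapred.compress.map.output", "true");
        props.put("mapred.map.output.compression.codec",
                "com.hadoop.compression.lzo.LzoCodec");
        return props;
    }

    // Renders the properties as "-Dkey=value" flags, in insertion order.
    static String toGenericOptions(Map<String, String> props) {
        return props.entrySet().stream()
                .map(e -> "-D" + e.getKey() + "=" + e.getValue())
                .collect(Collectors.joining(" "));
    }

    public static void main(String[] args) {
        System.out.println(toGenericOptions(lzoProperties()));
    }
}
```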

Proper tuning of the number of MapReduce tasks

• If each task in a MapReduce job takes less than 30-40 seconds, reduce the number of tasks. Every mapper or reducer process has to start a JVM (load it into memory), initialize it, and shut it down again after processing, and these JVM steps are costly. If a mapper runs for only 20-30 seconds, starting, initializing, and stopping the JVM takes up a significant share of the task's total time. Hence, it is recommended that each task run for at least 1 minute.

• If a job has more than 1 TB of input, consider increasing the block size of the input dataset to 256 MB or even 512 MB, so that the number of tasks is smaller. You can change the block size of existing data with the command hadoop distcp -Ddfs.block.size=$[256*1024*1024] /path/to/inputdata /path/to/inputdata-with-largeblocks

• As long as each task runs for at least 30-40 seconds, increase the number of mapper tasks to some multiple of the number of mapper slots in the cluster.

• Don’t schedule too many reduce tasks. For most jobs, the number of reduce tasks should be equal to or a bit less than the number of reduce slots in the cluster.
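The arithmetic behind these tips can be sketched as follows. The class TaskSizing and its methods are hypothetical helpers rather than Hadoop APIs, the slot counts are made-up figures, and the 95% factor used for reduce tasks is only an illustrative reading of "a bit less than the number of reduce slots".

```java
// Hypothetical helper illustrating the task-count arithmetic; not Hadoop code.
final class TaskSizing {

    static final long MB = 1024L * 1024L;
    static final long TB = MB * 1024L * 1024L;

    // Approximate number of map tasks: one per input block (ceiling division).
    static long mapTasks(long inputBytes, long blockBytes) {
        return (inputBytes + blockBytes - 1) / blockBytes;
    }

    // Number of scheduling rounds needed to run all tasks on the given slots.
    static long waves(long tasks, long slots) {
        return (tasks + slots - 1) / slots;
    }

    // Reduce task count slightly below the number of reduce slots.
    // The 95% factor is an illustrative assumption, not a value from the text.
    static int reduceTasks(int reduceSlots) {
        return Math.max(1, reduceSlots * 95 / 100);
    }

    public static void main(String[] args) {
        // Larger blocks mean fewer, longer-running map tasks for the same input.
        for (long blockMb : new long[] {64, 256, 512}) {
            System.out.println(blockMb + " MB blocks: "
                    + mapTasks(TB, blockMb * MB) + " map tasks for 1 TB");
        }
        // Assumed cluster with 200 map slots and 40 reduce slots.
        System.out.println("waves on 200 map slots: "
                + waves(mapTasks(TB, 256 * MB), 200));
        System.out.println("reduce tasks for 40 reduce slots: " + reduceTasks(40));
    }
}
```

With 1 TB of input, moving from 64 MB to 256 MB blocks cuts the map task count from 16384 to 4096, so each JVM start is amortized over four times as much data.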

Combiner between Mapper and Reducer

If an algorithm involves computing aggregates of any sort, use a Combiner. The Combiner performs some aggregation on the map side before the data reaches the reducer. The Hadoop MapReduce framework runs the combiner intelligently to reduce the amount of data that has to be written to disk and moved between the Map and Reduce stages of computation.
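The effect of a combiner can be illustrated with a plain-Java simulation of word count. Nothing here uses the Hadoop API: the class CombinerSketch and its methods are hypothetical and simply contrast the number of records leaving the map side with and without local aggregation.

```java
import java.util.AbstractMap;
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Plain-Java simulation of what a combiner does for word count; not Hadoop code.
final class CombinerSketch {

    // Map phase: emits one (word, 1) pair per word occurrence.
    static List<Map.Entry<String, Integer>> mapPhase(String line) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        for (String word : line.trim().split("\\s+")) {
            if (!word.isEmpty()) {
                pairs.add(new AbstractMap.SimpleEntry<>(word, 1));
            }
        }
        return pairs;
    }

    // Combine phase: sums the counts per word on the map side, so only one
    // record per distinct word has to be written out and shuffled.
    static Map<String, Integer> combine(List<Map.Entry<String, Integer>> pairs) {
        Map<String, Integer> combined = new LinkedHashMap<>();
        for (Map.Entry<String, Integer> pair : pairs) {
            combined.merge(pair.getKey(), pair.getValue(), Integer::sum);
        }
        return combined;
    }

    public static void main(String[] args) {
        List<Map.Entry<String, Integer>> raw = mapPhase("to be or not to be");
        Map<String, Integer> combined = combine(raw);
        System.out.println("records without combiner: " + raw.size());
        System.out.println("records with combiner: " + combined.size());
        System.out.println("combined output: " + combined);
    }
}
```

Summing works as a combiner because addition is associative and commutative, so partial sums computed on the map side give the same final result as summing everything in the reducer.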

Usage of most appropriate and compact writable type for data

Big data users, especially those switching from Hadoop Streaming to Java MapReduce, often use the Text writable type unnecessarily. Text can be convenient, but converting numeric data to and from UTF-8 strings is inefficient and can make up a significant portion of CPU time.
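To make the cost concrete, the sketch below compares the size of an int stored as a UTF-8 decimal string with the same value written as a fixed-width binary int through java.io.DataOutputStream, which is broadly how a numeric Writable such as IntWritable stores its value. The class EncodingSizeSketch is hypothetical and uses only the Java standard library, and Text itself would add a small length prefix on top of the UTF-8 bytes.

```java
import java.io.ByteArrayOutputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;

// Hypothetical comparison of text and binary encodings of an int; not Hadoop code.
final class EncodingSizeSketch {

    // Bytes needed to hold the value as a UTF-8 decimal string.
    static int textBytes(int value) {
        return Integer.toString(value).getBytes(StandardCharsets.UTF_8).length;
    }

    // Bytes needed for a fixed-width binary int written through DataOutput.
    static int binaryBytes(int value) {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(buffer)) {
            out.writeInt(value);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return buffer.size();
    }

    // Round trip through text: the formatting and parsing work that a
    // Text-based job repeats for every numeric field of every record.
    static int roundTripThroughText(int value) {
        byte[] utf8 = Integer.toString(value).getBytes(StandardCharsets.UTF_8);
        return Integer.parseInt(new String(utf8, StandardCharsets.UTF_8));
    }

    public static void main(String[] args) {
        int value = 1234567890;
        System.out.println("as UTF-8 text: " + textBytes(value) + " bytes");
        System.out.println("as binary int: " + binaryBytes(value) + " bytes");
        System.out.println("round trip ok: " + (roundTripThroughText(value) == value));
    }
}
```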

Re-usage of Writables

A very common mistake among MapReduce users is to allocate a new Writable object for every output from a mapper or reducer. Consider, for example, the following word-count mapper implementation:

    public void map(...) {
        ...
        for (String word : words) {
            // Allocates two new objects for every word emitted
            output.collect(new Text(word), new IntWritable(1));
        }
    }

This implementation causes the allocation of thousands of short-lived objects. While the Java garbage collector does a reasonable job of dealing with this, it is more efficient to write:

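    class MyMapper ... {
        // Allocated once and reused for every output record
        Text wordText = new Text();
        IntWritable one = new IntWritable(1);

        public void map(...) {
            ...
            for (String word : words) {
                wordText.set(word);
                // Emit the reused Text object, not the raw String
                output.collect(wordText, one);
            }
        }
    }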

Conclusion

These MapReduce job optimization techniques can help you improve job performance: configuring the cluster properly, compressing intermediate data with LZO, tuning the number of MapReduce tasks, using a combiner between mapper and reducer, choosing compact Writable types, and reusing Writables.