In this tutorial, we are going to discuss a very interesting topic i.e. Map Only job in Hadoop MapReduce. Initially, we will take a brief introduction of the Map and Reduce phase in Hadoop MapReduce, then after we will discuss what is Map only job in Hadoop MapReduce. Finally, we will also discuss the advantages and disadvantages of Hadoop Map Only job.

What is Hadoop Map Only Job in MapReduce?

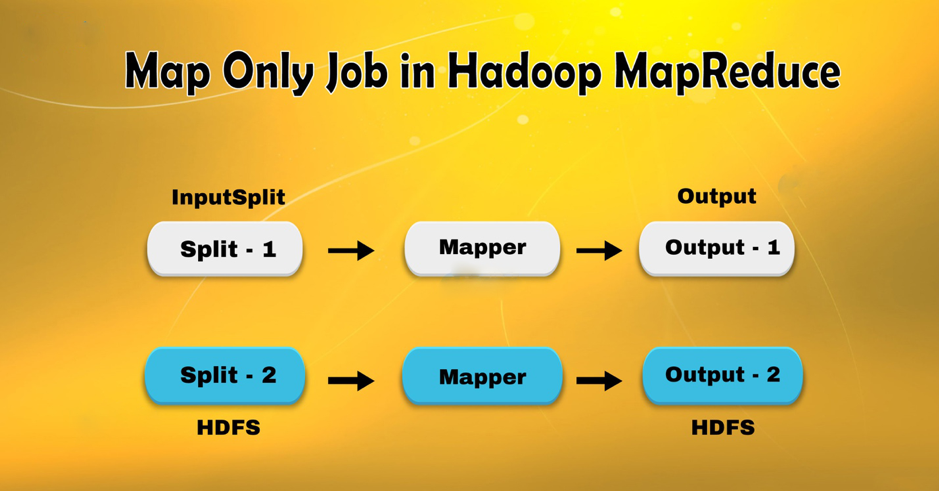

Map-Only job in the Hadoop is the process in which the mapper does all tasks. No task is done by the reducer. Mapper’s output is the final output.

MapReduce is the data processing layer of Hadoop. It processes large structured and unstructured data stored in HDFS. MapReduce also handles a huge amount of data in parallel. It does this by splitting the job (submitted job) into a set of independent tasks (sub-job). In Hadoop, MapReduce works by dividing the processing into phases: Map and Reduce.

• Map: It is the first phase of processing, where we state all the complex logic code. It takes a set of data and changes it into another set of data. It splits each element into tuples (key-value pairs).

• Reduce: It is the second phase of processing. Here we state light-weight processing like aggregation/summation. It gets the output from the map as input. Then it joins those tuples based on the key.

From this word-count example, we can say that there are two sets of parallel process, map and reduce. In the mapping process, the first input is divided to distribute the work among all the map nodes as shown above. Then the framework identifies each word and map to the number 1. Therefore, it creates pairs called tuples (key-value) pairs.

In the first mapper node, it transfers three words lion, tiger, and the river. Therefore, it produces 3 key-value pairs as the output of the node. Three different keys and values set to 1 and a similar process repeat for all nodes. Then it transfers these tuples to the reducer nodes. Partitioner carries out shuffling so that all tuples with the same key go to the same node.

In a reduce process what happens is an aggregation of values or rather an operation on values that share the same key.

Now, let us consider a scenario where we just required to operate. We don’t need aggregation, in such cases, we will prefer ‘Map-Only job’.

In Map-Only job, the map does all tasks with its InputSplit. Reducer does no job. Mapper’s output is the final output.

How to avoid Reduce Phase in MapReduce?

By setting job.setNumreduceTasks(0) in the configuration in a driver we can prevent the reduce phase. This will create many reducers as 0. Therefore the only mapper will be doing the complete task.

Advantages of Map only job in Hadoop MapReduce

In MapReduce job execution in between map and reduces phases there is key, sort, and shuffle phase. Shuffling –Sorting is accountable for sorting the keys in ascending order. Then grouping values based on the same keys. This phase is very costly. If the reduce phase is not needed, we should avoid it. Avoiding the reduce phase would eradicate the sorting and shuffle phase as well. Therefore, this will also save network congestion. The reason is that in shuffling, an output of the mapper moves to reduce. And when the data size is huge, large data needs to move to the reducer.

The output of the mapper is written to the local disk before sending it to reduce. But in map only job, this output is exactly written to HDFS. This further saves time as well as reduces costs.

Conclusion

Hence, we have seen that a Map-only job reduces network congestion by avoiding shuffle, sort, and reduce phases. The map alone takes care of overall processing and produce the output. By using a job.setNumreduceTasks(0) this is achieved. I hope you have understood the Hadoop map only job and its significance because we have covered everything about Map Only job in Hadoop.