Now we are going to study InputSplit in Hadoop MapReduce. Here, we will discuss what is Hadoop InputSplit, the need of InputSplit in MapReduce. We will also debate how these InputSplits are created in Hadoop MapReduce in detail

Introduction to InputSplit in Hadoop MapReduce

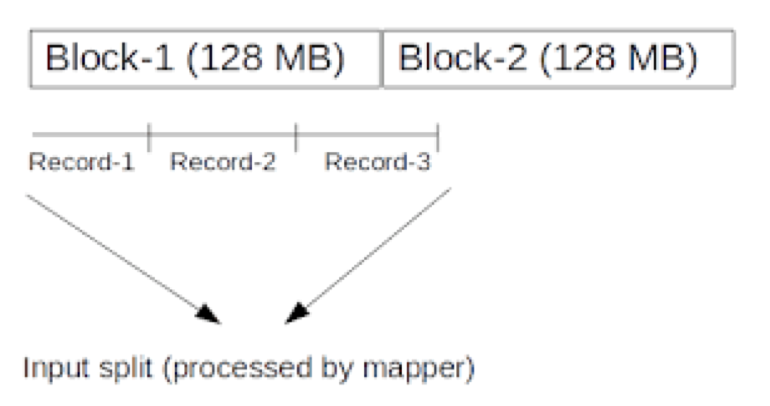

InputSplit is a logical illustration of data in Hadoop MapReduce. InputSplit represents the data that individual mapper processes. Therefore the number of map tasks is equal to the number of InputSplits. The framework divides split into records, which mapper processes.

MapReduce InputSplit length has measured in bytes. Every InputSplit has storage locations (hostname strings). The MapReduce system locates map tasks as close to the split’s data as possible by using storage locations. The framework handles Map tasks in the order of the size of the splits so that the largest one gets processed first (greedy approximation algorithm). This decreases the job run time. The main objective to focus on is that Inputsplit does not contain the input data.

How InputSplits are created in Hadoop MapReduce?

As a user, we don’t deal with InputSplit in Hadoop directly, as InputFormat (as InputFormat is responsible for creating the Inputsplit and dividing into the records) generates it. FileInputFormat splits a file into 128MB chunks. Besides, by setting mapred.min.split.size parameter in mapred-site.xml user can modify the value as per requirement. Moreover, by this, we can override the parameter in the Job object used to submit an appropriate MapReduce job. By writing a custom InputFormat we can also handle how the file is broken into splits.

InputSplit is user-defined. The user can also control split size based on the size of data in the MapReduce program. Hence, In a MapReduce job, the execution number of map tasks is equal to the number of InputSplits.

By calling ‘getSplit()’, the client calculates the splits for the job. Then it sent to the application master, which uses their storage locations to schedule map tasks that will handle them on the cluster.

After that map task permits the split to the createRecordReader() method. From that, it gets RecordReader for the split. Then RecordReader creates a record (key-value pair), which it passes to the map function.

Conclusion

In conclusion, we can say that InputSplit represents the data that individual mapper processes. For each split, one map task is created. Hence, InputFormat creates InputSplit.