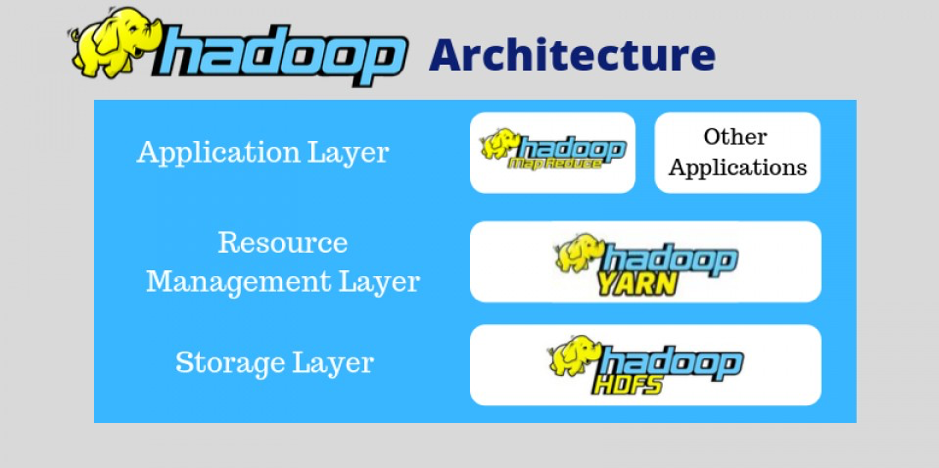

In this part of the tutorial, we will learn how Hadoop works: its components and daemons, the roles of HDFS, MapReduce, and YARN, and a step-by-step summary of how Hadoop processes data.

How Does Hadoop Work?

Apache Hadoop performs distributed processing of huge data sets across a cluster of commodity servers, working on multiple machines concurrently. To process any kind of data, the client submits the data and a program to Hadoop. HDFS stores the data, MapReduce processes it, and YARN allocates cluster resources and schedules the tasks.

Let us discuss in detail how Hadoop works:

i. HDFS

The Hadoop Distributed File System (HDFS) has a master-slave topology. It runs two daemons: NameNode and DataNode.

NameNode

The NameNode is the daemon running on the master machine. It is the centerpiece of an HDFS file system. It stores the directory tree of all files in the file system and tracks where across the cluster the file data resides. The NameNode does not store the data contained in these files.

When client applications want to add, copy, move, or delete a file, they contact the NameNode. The NameNode responds to the request by returning a list of the relevant DataNode servers where the data lives.
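The lookup described above can be sketched as a small in-memory model. This is only an illustration: the class `ToyNameNode`, its method names, the path, and the host names are hypothetical and do not correspond to the real HDFS API. The point is that the NameNode holds metadata (file to blocks, block to DataNodes) and never the file contents.

```python
from dataclasses import dataclass, field


@dataclass
class ToyNameNode:
    """Hypothetical model: holds metadata only, never file contents."""
    file_blocks: dict = field(default_factory=dict)      # path -> [block_id, ...]
    block_locations: dict = field(default_factory=dict)  # block_id -> [host, ...]

    def add_file(self, path, blocks):
        """Register a file; `blocks` maps each block id to the hosts storing it."""
        self.file_blocks[path] = list(blocks)
        for block_id, hosts in blocks.items():
            self.block_locations[block_id] = list(hosts)

    def get_block_locations(self, path):
        """Return [(block_id, [hosts])] so a client can contact DataNodes directly."""
        if path not in self.file_blocks:
            raise FileNotFoundError(path)
        return [(b, self.block_locations[b]) for b in self.file_blocks[path]]


namenode = ToyNameNode()
namenode.add_file(
    "/logs/app.log",
    {"blk_1": ["dn1", "dn2", "dn3"], "blk_2": ["dn2", "dn3", "dn4"]},
)
locations = namenode.get_block_locations("/logs/app.log")
print(locations)
```

The client would then read each block from one of the listed hosts without routing the data through the NameNode.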

DataNode

The DataNode daemon runs on the slave nodes. It stores the data in HDFS. In a functional file system, data is replicated across many DataNodes.

On startup, a DataNode connects to the NameNode. It then keeps waiting for requests from the NameNode to access data. Once the NameNode provides the location of the data, client applications can talk directly to a DataNode. While replicating data, DataNode instances can also talk to each other.

Replica Placement

The placement of replicas determines HDFS reliability and performance. Optimized replica placement sets HDFS apart from other distributed file systems. Large HDFS instances run on a cluster of computers spread across many racks. Communication between nodes on different racks has to go through switches. Typically, the network bandwidth between nodes on the same rack is greater than that between machines on separate racks.

The rack awareness algorithm determines the rack id of each DataNode. Under a simple policy, each replica is placed on a unique rack. This prevents data loss when an entire rack fails and lets reads use bandwidth from multiple racks. However, this method increases the cost of writes, because each write has to transfer blocks to multiple racks.

Let us assume that the replication factor is three. HDFS's placement policy then puts one replica on the local rack and the other two replicas on different nodes of the same remote rack. This cuts inter-rack write traffic, which improves write performance. The chance of rack failure is far lower than that of node failure, so this policy does not compromise data reliability and availability. It does, however, reduce the aggregate network bandwidth available when reading data, because a block is placed on only two unique racks rather than three.
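A minimal sketch of this three-replica policy, assuming a hypothetical cluster described by a plain dictionary of rack id to node names. The function names and the choice to put the first replica on the writer's own node are assumptions made for illustration, not HDFS's actual implementation.

```python
import random


def rack_of(node, topology):
    """Return the rack id that contains `node`."""
    for rack, nodes in topology.items():
        if node in nodes:
            return rack
    raise ValueError(f"unknown node: {node}")


def place_replicas(writer_node, topology, rng=random):
    """Pick three nodes: one on the writer's rack, two on a single remote rack."""
    local_rack = rack_of(writer_node, topology)
    # A remote rack needs at least two nodes to host the second and third replica.
    remote_racks = [
        rack for rack, nodes in topology.items()
        if rack != local_rack and len(nodes) >= 2
    ]
    if not remote_racks:
        raise ValueError("need a remote rack with at least two nodes")
    remote_rack = rng.choice(remote_racks)
    second, third = rng.sample(topology[remote_rack], 2)
    return [writer_node, second, third]


# Hypothetical three-rack cluster.
topology = {
    "rack1": ["dn1", "dn2"],
    "rack2": ["dn3", "dn4"],
    "rack3": ["dn5", "dn6"],
}
replicas = place_replicas("dn1", topology, random.Random(0))
racks_used = {rack_of(node, topology) for node in replicas}
print(replicas, racks_used)
```

Whatever the random choice, the block ends up on three distinct nodes but only two racks, so a write crosses the rack boundary once instead of twice.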

ii. MapReduce

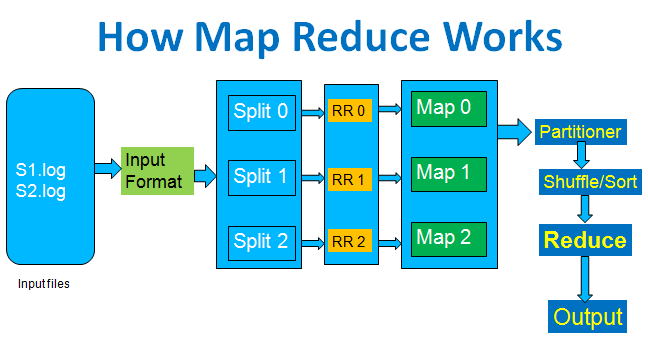

The general idea of the MapReduce algorithm is to process data in parallel across the distributed cluster and then combine the partial results into the desired output.

Hadoop MapReduce includes several stages:

• In the first step, the program locates and reads the "input file" containing the raw data.

• As the file format is arbitrary, the data must be converted into something the program can process. The InputFormat and RecordReader do this job.

• InputFormat uses the InputSplit function to split the file into smaller pieces.

• The RecordReader then converts the raw data for processing by the map function. It outputs a list of key-value pairs.

• Once the mapper has processed these key-value pairs, the result goes to the "OutputCollector". Another function called "Reporter" notifies the user when the mapping task finishes.

• In the next step, the Reduce function performs its task on the key-value pairs from the mapper, grouped by key.

• Finally, OutputFormat organizes the key-value pairs from the Reducer and writes them to HDFS.

Being the heart of the Hadoop system, MapReduce processes the data in a highly resilient, fault-tolerant manner.
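The stages above can be sketched as a single-process word count. This is a plain Python simulation, not the Hadoop API: the names `record_reader`, `mapper`, `reducer`, and `run_job` are hypothetical, each input string stands in for one split, and the grouping dictionary stands in for the shuffle that Hadoop performs between map and reduce.

```python
from collections import defaultdict


def record_reader(split):
    """Turn one raw text split into (byte offset, line) key-value pairs."""
    offset = 0
    for line in split.splitlines(keepends=True):
        yield offset, line.rstrip("\n")
        offset += len(line)


def mapper(_offset, line):
    """Emit (word, 1) for every word in the line."""
    for word in line.lower().split():
        yield word, 1


def reducer(word, counts):
    """Combine all counts emitted for one word."""
    return word, sum(counts)


def run_job(splits):
    """Run map over every split, group by key, then reduce each group."""
    grouped = defaultdict(list)
    for split in splits:
        for key, value in record_reader(split):
            for out_key, out_value in mapper(key, value):
                grouped[out_key].append(out_value)  # stand-in for the shuffle
    return dict(reducer(k, v) for k, v in sorted(grouped.items()))


result = run_job([
    "hadoop stores data\nhadoop processes data",
    "yarn schedules tasks",
])
print(result)
```

In a real cluster each split would be handled by a separate map task, possibly on a different node, and the reduce output would be written to HDFS rather than returned as a dictionary.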

iii. YARN

YARN separates resource management and job scheduling/monitoring into separate daemons. There is one global ResourceManager and a per-application ApplicationMaster. An application can be either a single job or a Directed Acyclic Graph (DAG) of jobs.

The ResourceManager has two components: the Scheduler and the ApplicationManager (called ApplicationsManager in the Apache documentation).

The Scheduler is a pure scheduler, i.e. it does not monitor or track the status of running applications. It only allocates resources to the various competing applications. It also offers no guarantee of restarting tasks that fail because of hardware or application failure. The Scheduler allocates resources based on the abstract notion of a container, which bundles a share of resources such as CPU, memory, disk, and network.
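To make the container idea concrete, here is a toy first-fit allocator. It is a sketch under simplifying assumptions: the names `NodeCapacity` and `allocate_container` are hypothetical, only memory and virtual cores are modelled, and real YARN schedulers apply queue and fairness policies that are ignored here.

```python
from dataclasses import dataclass


@dataclass
class NodeCapacity:
    """Free resources on one worker node (hypothetical model)."""
    name: str
    free_memory_mb: int
    free_vcores: int


def allocate_container(nodes, memory_mb, vcores):
    """Grant a container on the first node with enough free resources, else None."""
    for node in nodes:
        if node.free_memory_mb >= memory_mb and node.free_vcores >= vcores:
            node.free_memory_mb -= memory_mb
            node.free_vcores -= vcores
            return {"node": node.name, "memory_mb": memory_mb, "vcores": vcores}
    return None  # no node can host the request, so it stays pending


nodes = [NodeCapacity("nm1", 4096, 2), NodeCapacity("nm2", 8192, 4)]
first = allocate_container(nodes, 3072, 2)    # fits on nm1
second = allocate_container(nodes, 3072, 1)   # nm1 is now too small, goes to nm2
third = allocate_container(nodes, 16384, 1)   # larger than any node
print(first, second, third)
```

Note that the allocator only hands out resources. Like the Scheduler described above, it does not watch what runs inside a container or restart anything that fails.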

The ApplicationManager has the following tasks:

• Accepts job submissions from the client.

• Negotiates the first container for running the application-specific ApplicationMaster.

• Restarts the ApplicationMaster container on failure.

The ApplicationMaster has the following responsibilities:

• Negotiates containers from the Scheduler.

• Tracks container status and monitors progress.

YARN supports resource reservation via its reservation system. With it, a user can reserve resources for a particular job by specifying the resources needed over time together with temporal constraints such as deadlines. The reservation system makes sure that the resources are available to the job until its completion. It also performs admission control for reservations.

YARN can scale beyond a few thousand nodes via YARN Federation. YARN Federation wires multiple sub-clusters together into a single massive cluster, so several independent clusters can be used together for one large job. This makes very large-scale systems possible.

Let us summarize how Hadoop works step by step:

• Input data is divided into blocks of 128 MB (the default block size), and the blocks are then distributed to various nodes.

• Once all the blocks of the data are stored on DataNodes, the user can process the data.

• The ResourceManager then schedules the program on individual nodes.

• Once all the nodes have processed the data, the output is written back to HDFS.
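The block-splitting step is simple arithmetic, sketched below. The function name `split_into_blocks` and the 500 MB example file are illustrative assumptions, and sizes are kept in whole megabytes for readability.

```python
BLOCK_SIZE_MB = 128  # block size used in the steps above


def split_into_blocks(file_size_mb, block_size_mb=BLOCK_SIZE_MB):
    """Return the sizes in MB of the blocks that a file of this size occupies."""
    full_blocks, remainder = divmod(file_size_mb, block_size_mb)
    sizes = [block_size_mb] * full_blocks
    if remainder:
        sizes.append(remainder)  # the last block holds only the leftover data
    return sizes


blocks = split_into_blocks(500)
print(blocks)  # [128, 128, 128, 116]
```

A 500 MB file therefore becomes four blocks, and the final block is only as large as the remaining 116 MB rather than being padded to 128 MB.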

That covers the step-by-step view of how Hadoop works.

Conclusion

To wrap up how Hadoop works: the client first submits the data and the program. HDFS stores the data, and MapReduce processes it. Now that we have covered Hadoop's introduction and how Hadoop works, the next step is to discuss how to install Hadoop on a single node and on multiple nodes.