At present, we will discuss a trending question Hadoop Vs MongoDB: Which is a better tool for Big Data? Currently, all the industries, such as retail, healthcare, telecom, social media are generating a huge amount of data. By 2020, the data available will reach 44 zettabytes.

We can utilize MongoDB and Hadoop to store, process, and manage Big data. Although they both have many similarities but have a different approach to process and store data is quite different.

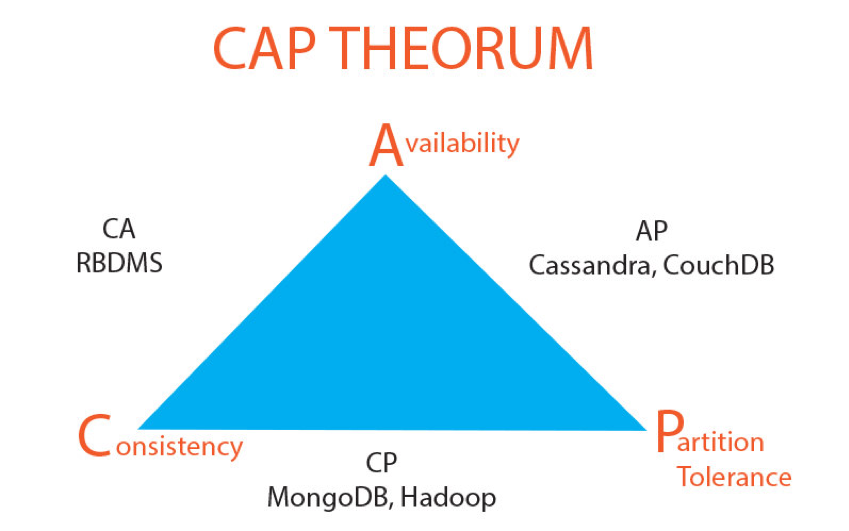

1. CAP Theorem

CAP Theorem states that distributed computing cannot achieve simultaneous Consistency, Availability, and Partition Tolerance while processing data. This theory can be related to Big Data, as it helps visualize bottlenecks that any solution will reach; only two goals can be achieved by the system. So, when the CAP Theorem’s “pick two” methodology is being taken into consideration, the choice is really about picking the two options that the platform will be more capable of handling.

Conventional RDBMS provides consistency and availability but falls short on partition tolerance. Big Data offers either partition tolerance and consistency or availability and partition tolerance.

2. Hadoop vs MongoDB

Let us begin the comparison between Hadoop and MongoDB for Big Data:

a. What is MongoDB?

MongoDB was developed by 10 gen company in 2007 as a cloud-based app engine, which was projected to run different software and services. They have created Babble(the app engine) and MongoDB(the database). The idea didn’t work properly so they released MongoDB as an open-source. We can count MongoDB as a Big data solution, it’s worth noting that it’s a general-purpose platform, design to replace or enhance existing RDBMS systems, giving it a healthy variety of use cases.

Working of MongoDB

As MongoDB is a document-oriented database management system it stores data in collections. Here several data fields can be queried once, whereas multiple queries required by RDBMS’ that allocate data across multiple tables in columns and rows. We can install MongoDB on either Windows or Linux. But as we count MongoDB for real-time low latency projects Linux is an ideal choice for that point.

Benefits of MongoDB for Big Data

MongoDB’s greatest strong point is its robustness, capable of far more flexibility than Hadoop, including potential replacement of existing RDBMS. Similarly, MongoDB is inherently better at handling real-time data analytics. Due to instantly available data also it is capable of client-side data delivery, which is not as common with Hadoop configurations. One more strong point of MongoDB is its geospatial indexing abilities, making an ideal use case for real-time geospatial analysis.

Limitations of MongoDB for Big Data

When we are examining Hadoop vs MongoDb, the drawbacks of Mongo is must: MongoDB is subject to most criticism because it tries so many various things, though it seems to have just as much approval. A key issue with MongoDB is fault tolerance, which can cause data loss. Lock constraints, poor integration with RDBMS, and many more are the added complaints against MongoDB. MongoDB also can only ingest data in CSV or JSON formats, which may require added data transformation.

Till now, we only discuss MongoDB for Hadoop vs MongoDB. Now, its time to disclose the Hadoop.

b. What is Hadoop?

Hadoop was an open-source project from starting only. It was originally stemmed from a project called Nutch, an open-source web crawler created in 2002. After that in 2003, Google released a white paper on its Distributed File System(DFS), and Nutch referred the same and developed its NDFS. After that in 2004 Google introduced the concept of MapReduce which was adopted by Nutch in 2005. Hadoop development was officially started in 2006. Hadoop became a platform for handling huge amounts of data in parallel across clusters of commodity hardware. It has become synonymous with Big Data, as it is the most familiar Big Data tool.

Working of Apache Hadoop

Hadoop has two primary components: the Hadoop Distributed File System and MapReduce. Secondary components include Pig, Hive, HBase, Oozie, Sqoop, and Flume. Hadoop’s HBase database achieves horizontal scalability through database sharing just like MongoDB. Hadoop runs on clusters of commodity hardware. HDFS divides the file into smaller portions and stores them distributedly over the cluster. MapReduce handles the data which is stored distributedly over the cluster. MapReduce utilizes the power of distributed computing, where multiple nodes work in parallel to complete the task.

Strength Related to Big Data Use Cases

Alternatively, Hadoop is more suitable at batch processing and long-running ETL jobs and analysis. The biggest strong point of Hadoop is that it was built for Big Data, whereas MongoDB became an option over time. However Hadoop may not process real-time data as well as MongoDB, ad-hoc SQL-like queries can be run with Hive, which is hyped as being effective as a query language than JSON/BSON. Hadoop’s MapReduce implementation is also much more efficient than MongoDB’s, and it is an ideal choice for evaluating huge amounts of data. Finally, Hadoop accepts data in any format, which eliminates data transformation involved with data processing.

Weakness Related to Big Data Use Cases

Hadoop is developed mainly for batch processing, it can’t process the data in real-time. Furthermore, there are many requirements like interactive processing, graph processing, iterative processing, which Hadoop can’t handle efficiently.

3. Difference Between Hadoop and MongoDB

This is in brief about Hadoop Vs MongoDB:

i. Language

Hadoop is written in Java Programming.

On the other hand, C++ used in MongoDB.

ii. Open Source

Hadoop is open-source.

MongoDB is open-source.

iii. Scalability

Hadoop is scalable.

MongoDB is scalable.

iv. NoSQL

Hadoop does not support NoSQL, although HBase on the top of Hadoop can support NoSQL

MongoDB supports NoSQL.

v. Data Structure

Hadoop has a flexible data structure.

MongoDB supports the document-based data structure

vi. Cost

Hadoop is expensive than MongoDB as it is a collection of software.

MongoDB is less expensive as it is a single product.

vii. Application

Hadoop is having large scale processing.

While MongoDB has real-time extraction and processing.

viii. Low latency

Hadoop concentrates more on high throughput rather than low-latency

MongoDB can process the data at very low-latency, it supports real-time data mining

ix. Frameworks

Hadoop is a Big Data framework, which can process a wide variety of Big Data requirements.

MongoDB is a NoSQL DB, which can process CSV/JSON.

x. Data Volumes

Hadoop can handle huge volumes of data, in the range of 1000s of PBs.

MongoDB can process the moderate size of data, in the range of 100s of TBs.

xi. Data Format

Hadoop can process any format of data structured, semi-structured, or unstructured.

MongoDB can handle only CSV and JSON data.

xii. Geospatial Indexing

Hadoop can’t handle geospatial data efficiently.

MongoDB can evaluate geospatial data with its capability of geospatial indexing.

6. Summary of Hadoop Vs MongoDB

Therefore, we have seen the entire Hadoop vs MongoDB with advantages and disadvantages to prove the best tool for Big Data. A key difference between MongoDB and Hadoop is that MongoDB is a database, while Hadoop is a collection of various software components that create a data processing framework. Both of them are having some advantages which make them unique but at the same time, both have some drawbacks.

Therefore, this was all about the difference between Hadoop and MongoDB. I hope you like it.