Although Hadoop is one of the most powerful tools for big data, it has various limitations: it is not suited to small files, it cannot reliably handle live data, its processing speed is slow, and it is not effective for iterative processing or caching.

In this tutorial on the drawbacks of Hadoop, we will first learn what Hadoop is and what its pros and cons are. We will look at the features that made Hadoop so popular, then at the major disadvantages that led to Apache Spark and Apache Flink, and finally at different ways to overcome these drawbacks.

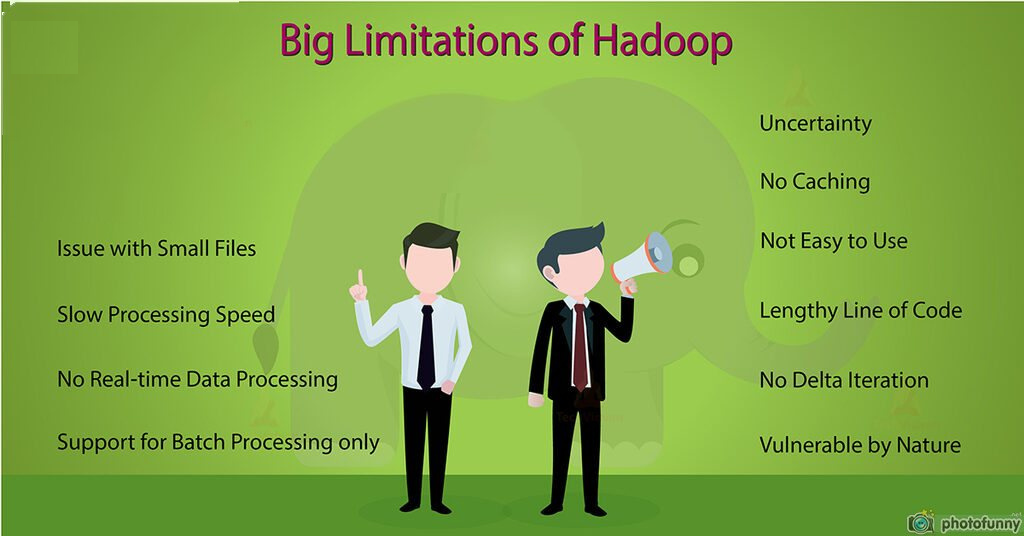

Major Limitations of Hadoop for Big Data Analytics

In this section, we will discuss the various limitations of Hadoop along with their solutions:

1. The issue with Small Files

Hadoop is not suited to small data. The Hadoop Distributed File System (HDFS) cannot efficiently support random reads of small files because it is designed for high-capacity, high-throughput access to large files.

Small files are a major problem in HDFS. A small file is one that is considerably smaller than the HDFS block size (128 MB by default). HDFS is designed to work with a small number of large files that hold huge data sets, not with a large number of small files, so it cannot cope well when we store huge numbers of small files. Because the NameNode holds the HDFS namespace, too many small files will overload it.
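To make the NameNode pressure concrete, here is a back-of-the-envelope sketch. It assumes the commonly quoted rule of thumb that every file and every block object takes roughly 150 bytes of NameNode memory, and that each small file fits in one block; both figures are assumptions for illustration, not measurements, and `namenode_bytes` is a hypothetical helper.

```python
BYTES_PER_OBJECT = 150   # assumed rule of thumb per file or block object
BLOCK_SIZE_MB = 128      # HDFS default block size

def namenode_bytes(num_files, blocks_per_file=1):
    """Approximate NameNode memory for the given files (file + block objects)."""
    return num_files * (1 + blocks_per_file) * BYTES_PER_OBJECT

# 10 million 1 MB files: each is its own file object and its own block
small_files = 10_000_000
print(namenode_bytes(small_files))                  # 3000000000 (about 3 GB)

# The same 10,000,000 MB of data merged into full 128 MB files
merged_files = small_files * 1 // BLOCK_SIZE_MB
print(merged_files, namenode_bytes(merged_files))   # 78125 23437500 (about 23 MB)
```

Under these assumptions, the same data costs the NameNode about 128 times less memory once it is stored in block-sized files, which is why merging small files is the first remedy below.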

Solution-

• The solution to Hadoop's small file problem is simple: merge the small files into larger files, and then copy the larger files to HDFS.

• HAR files (Hadoop Archives) were introduced to reduce the pressure that large numbers of files put on the NameNode's memory. HAR files work by layering a filesystem on top of HDFS. They are created with the Hadoop archive command, which runs a MapReduce job to pack the files being archived into a small number of HDFS files. However, reading files in a HAR is no more efficient than reading them directly from HDFS; it is actually slower, because each HAR file access requires reading two index files as well as the data file.

• Sequence files work very well in practice to overcome the small file problem: we use the filename as the key and the file contents as the value. By writing a program that puts many small files (for example, 100 KB each) into a single Sequence file, we can then process them in a streaming fashion by operating on that Sequence file. Because Sequence files are splittable, MapReduce can split one into chunks and process each chunk independently.

• Storing files in HBase is a very common design pattern for overcoming the small file problem with HDFS. Rather than storing millions of small files as separate files, we add the binary content of each file to an HBase cell.
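The Sequence file idea above (filename as key, contents as value, many records in one container) can be sketched with the Python standard library. This is a hypothetical length-prefixed layout for illustration, not Hadoop's actual SequenceFile format: real Sequence files also write sync markers, which is what makes them splittable, and this sketch omits them.

```python
import io
import struct

HEADER = ">II"  # big-endian key length and value length (assumed layout)

def pack(files):
    """Pack (filename, contents) pairs into one byte stream of records."""
    buf = io.BytesIO()
    for name, data in files:
        key = name.encode("utf-8")
        buf.write(struct.pack(HEADER, len(key), len(data)))
        buf.write(key)
        buf.write(data)
    return buf.getvalue()

def unpack(blob):
    """Yield (filename, contents) records one at a time, in a streaming fashion."""
    offset = 0
    header_size = struct.calcsize(HEADER)
    while offset < len(blob):
        key_len, val_len = struct.unpack_from(HEADER, blob, offset)
        offset += header_size
        key = blob[offset:offset + key_len].decode("utf-8")
        offset += key_len
        value = blob[offset:offset + val_len]
        offset += val_len
        yield key, value

small = [("a.txt", b"hello"), ("b.txt", b"world!"), ("c.txt", b"")]
container = pack(small)  # one large "file" instead of three small ones
for name, contents in unpack(container):
    print(name, contents)
```

The point of the design is that the file system sees a single large file, while a reader can still recover every original file by name.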

2. Slow Processing Speed

In Hadoop, MapReduce processes large data sets with a parallel and distributed algorithm. It performs two tasks, Map and Reduce, and these tasks take a lot of time, which increases latency. Although MapReduce distributes data and processing across the cluster, it reads its input from disk and writes intermediate results back to disk between stages, which slows processing down.

Solution-

Spark overcomes this limitation of Hadoop through in-memory processing of data. In-memory processing is faster because no time is spent moving data and processes in and out of the disk. Spark can be up to 100 times faster than MapReduce because it processes everything in memory. We can also use Flink, which can be faster than Spark because of its streaming architecture; Flink can also be instructed to process only the parts of the data that have changed, which significantly increases job performance.

3. Support for Batch Processing Only

Hadoop supports batch processing only; it does not handle streaming data, so its overall performance is slower. Hadoop's MapReduce framework also does not make full use of the cluster's memory.

Solution-

To address these drawbacks of Hadoop, Spark can be used to increase performance, but Spark's stream processing is not as efficient as Flink's because it uses micro-batch processing. Flink improves overall performance because it offers a single runtime for both streaming and batch processing. Flink also uses closed-loop iteration operators, which make machine learning and graph processing faster.
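Why micro-batching adds delay can be shown with a simplified timing model. The sketch assumes that batches close at fixed multiples of the interval and that processing itself takes no time; the function names are hypothetical and are not Spark or Flink APIs.

```python
def micro_batch_latencies(arrivals_ms, interval_ms):
    """Wait per record when records are only processed as their batch closes."""
    latencies = []
    for t in arrivals_ms:
        # The batch containing t closes at the next multiple of interval_ms
        batch_close = (t // interval_ms + 1) * interval_ms
        latencies.append(batch_close - t)
    return latencies

def record_at_a_time_latencies(arrivals_ms):
    # Each record is handled the moment it arrives (processing cost ignored)
    return [0 for _ in arrivals_ms]

arrivals = [100, 400, 900, 1200]
print(micro_batch_latencies(arrivals, interval_ms=1000))  # [900, 600, 100, 800]
print(record_at_a_time_latencies(arrivals))               # [0, 0, 0, 0]
```

Under this model, a record can wait up to one full batch interval before it is processed, whereas a record-at-a-time engine has no such built-in wait.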

4. No Real-time Data Processing

Apache Hadoop is designed for batch processing, which means it takes a huge amount of data as input, processes it, and produces the result. Although batch processing is very efficient for large volumes of data, the output can be delayed significantly, depending on the size of the data being processed and the computational power of the system. Hadoop is therefore not suitable for real-time data processing.

Solution-

• Apache Spark supports stream processing, which involves continuous input and output of data. Stream processing emphasizes the velocity of the data, and data is processed within a short period.

• Apache Flink offers a single runtime for both streaming and batch processing, so one common runtime serves both data streaming applications and batch processing applications. Flink is a stream processing system that can process data row by row in real time.
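The difference between batch output and continuous stream output can be illustrated with a running sum. This is a minimal sketch with hypothetical function names, not Spark or Flink code: the batch version produces nothing until all input is consumed, while the streaming version emits an updated result after every record.

```python
from typing import Iterable, Iterator

def batch_total(records: Iterable[int]) -> int:
    # Batch style: one result, available only after the whole input is read
    return sum(records)

def streaming_totals(records: Iterable[int]) -> Iterator[int]:
    # Streaming style: emit an updated result as each record arrives
    total = 0
    for value in records:
        total += value
        yield total

events = [5, 3, 7]
print(batch_total(events))              # 15
print(list(streaming_totals(events)))   # [5, 8, 15]
```

Because `streaming_totals` is a generator, it also works on an input that never ends, which a batch job cannot handle.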

5. No Delta Iteration

Hadoop is not effective for iterative processing, because it does not support cyclic data flow (that is, a chain of stages in which the output of each stage is the input to the next, with the last stage feeding back into the first).
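A delta iteration feeds each round's output back in, but only reprocesses the elements that changed in the previous round. Flink's delta iterations keep a solution set plus a workset of changed elements, and connected components is the classic example. The sketch below is a plain Python illustration of that idea under those assumptions, not Flink's API; `connected_components_delta` is a hypothetical name.

```python
from collections import defaultdict

def connected_components_delta(edges):
    """Label each vertex with the smallest vertex id in its component.

    `labels` plays the role of the solution set; `workset` holds only the
    vertices whose label changed in the previous step.
    """
    neighbours = defaultdict(set)
    for a, b in edges:
        neighbours[a].add(b)
        neighbours[b].add(a)

    labels = {v: v for v in neighbours}  # initial solution set
    workset = set(neighbours)            # every vertex starts as "changed"
    while workset:                       # the cycle: output feeds the next round
        next_workset = set()
        for v in workset:
            for n in neighbours[v]:
                # Push a smaller label to a neighbour; only neighbours that
                # actually changed are scheduled for the next step
                if labels[v] < labels[n]:
                    labels[n] = labels[v]
                    next_workset.add(n)
        workset = next_workset
    return labels

print(connected_components_delta([(1, 2), (2, 3), (4, 5)]))
```

Vertices whose labels have settled drop out of the workset, so later rounds touch less and less of the graph.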

Solution-

We can use Apache Spark to overcome this drawback of Hadoop, because it accesses data from RAM instead of disk, which dramatically improves the performance of iterative algorithms that access the same dataset repeatedly. Spark iterates over its data in batches; for iterative processing in Spark, each iteration is scheduled and executed separately.

6. Latency

In Hadoop, the MapReduce framework is comparatively slow because it is designed to support different formats, structures, and large volumes of data. In MapReduce, Map takes a set of data and converts it into another set of data in which individual elements are broken down into key-value pairs; Reduce then takes the map output as its input and processes it further. Performing these tasks takes a lot of time, which increases latency.
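A minimal, single-machine sketch of the Map and Reduce steps described above, using word count as the example. To mimic the disk round-trip between phases, it writes the map output to a temporary file and reads it back before reducing. Real Hadoop also partitions and sorts map output on local disks and writes the final output to HDFS; none of that is modelled here, and the function names are hypothetical.

```python
import json
import os
import tempfile
from collections import defaultdict

def map_phase(lines):
    # Map: break each line into (word, 1) key-value pairs
    for line in lines:
        for word in line.split():
            yield word.lower(), 1

def reduce_phase(grouped):
    # Reduce: combine all values that share the same key
    return {key: sum(values) for key, values in grouped.items()}

def word_count(lines):
    with tempfile.TemporaryDirectory() as tmp:
        spill = os.path.join(tmp, "map-output.jsonl")
        # Like MapReduce, persist the map output to disk first
        with open(spill, "w", encoding="utf-8") as out:
            for key, value in map_phase(lines):
                out.write(json.dumps([key, value]) + "\n")
        # Shuffle: read the intermediate data back and group values by key
        grouped = defaultdict(list)
        with open(spill, encoding="utf-8") as inp:
            for record in inp:
                key, value = json.loads(record)
                grouped[key].append(value)
    return reduce_phase(grouped)

print(word_count(["the quick fox", "the lazy dog"]))
```

Every record is serialized, written, read back, and parsed before Reduce can start; keeping that intermediate data in memory is the saving the Spark solution below describes.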

Solution-

Spark is used to reduce this drawback of Hadoop. Apache Spark is also a batch system, but it is relatively faster because it caches much of the input data in memory as RDDs (Resilient Distributed Datasets) and keeps intermediate data in memory as well. Flink's data streaming achieves low latency and high throughput.

7. Not Easy to Use

In Hadoop, MapReduce developers need to hand-code every operation, which makes it very difficult to work with. MapReduce has no interactive mode, although add-ons such as Hive and Pig make working with MapReduce a little easier for adopters.

Solution-

To solve this limitation of Hadoop, we can use Spark. Apache Spark has an interactive mode, so developers and users alike can get immediate feedback on queries and other activities. Spark is easy to program because it has many high-level operators, and Flink is similarly easy to use because it also provides high-level operators. In this way, Spark can solve many of Hadoop's drawbacks.

8. Security

Hadoop makes complex applications challenging to manage. If the person managing the platform does not know how to secure it, your data can be at huge risk. Hadoop does not encrypt data at the storage and network levels by default, which is a major concern. Hadoop supports Kerberos authentication, but Kerberos is difficult to manage.

HDFS supports access control lists (ACLs) and a traditional file permissions model. In addition, third-party vendors have enabled organizations to use Active Directory, Kerberos, and LDAP for authentication.

Solution-

Spark offers a security bonus that helps overcome these drawbacks of Hadoop. If Spark runs on HDFS, it can use HDFS ACLs and file-level permissions. Spark can also run on YARN, which gives it the ability to use Kerberos authentication.

9. No Abstraction

Hadoop does not have any kind of abstraction, so MapReduce developers need to hand-code every operation, which makes it very hard to work with.

Solution-

To overcome this limitation of Hadoop, Apache Spark provides the RDD abstraction for batch processing, and Flink provides the DataSet abstraction.
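What an abstraction like RDD buys the developer can be sketched with a tiny, hypothetical single-machine class. `LazyDataset` is not Spark's or Flink's API; it only imitates the general idea that transformations (`map`, `filter`) record work lazily and actions (`collect`, `count`) run it, so developers chain high-level operators instead of hand-coding each step.

```python
from typing import Callable, Generic, Iterable, List, TypeVar

T = TypeVar("T")
U = TypeVar("U")

class LazyDataset(Generic[T]):
    """Hypothetical stand-in for an RDD-like abstraction (single machine)."""

    def __init__(self, source: Callable[[], Iterable[T]]):
        self._source = source  # recipe for producing the data, not the data

    def map(self, fn: Callable[[T], U]) -> "LazyDataset[U]":
        parent = self._source
        return LazyDataset(lambda: (fn(x) for x in parent()))

    def filter(self, pred: Callable[[T], bool]) -> "LazyDataset[T]":
        parent = self._source
        return LazyDataset(lambda: (x for x in parent() if pred(x)))

    def collect(self) -> List[T]:
        # Action: only now is the recorded pipeline executed
        return list(self._source())

    def count(self) -> int:
        return sum(1 for _ in self._source())

squares_of_evens = (
    LazyDataset(lambda: range(10))
    .filter(lambda x: x % 2 == 0)
    .map(lambda x: x * x)
)
print(squares_of_evens.collect())  # [0, 4, 16, 36, 64]
print(squares_of_evens.count())    # 5
```

In a real engine the same recorded pipeline is what allows the work to be distributed and recomputed on failure; this sketch only shows the programming model.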

10. Vulnerable by Nature

Hadoop is written almost entirely in Java, one of the most widely used languages. Because Java is so widespread, it has been heavily exploited by cybercriminals and, as a result, implicated in various security breaches.

11. No Caching

Hadoop is not effective for caching. In Hadoop, MapReduce cannot cache intermediate data in memory for later use, which reduces Hadoop's performance.

Solution-

Spark and Flink overcome this limitation of Hadoop by caching data in memory for subsequent iterations, which improves overall performance.
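The benefit of caching for iterative jobs can be shown by counting reads. In this sketch, `CountingSource` is a hypothetical stand-in for data on disk and `iterate_mean_estimate` is a toy iterative algorithm; neither is a Spark or Flink API. Without a cache, every iteration rereads the source, as chained MapReduce jobs would; with a cache, the data is read once and then served from memory.

```python
class CountingSource:
    """Hypothetical data source that counts how often it is read from 'disk'."""

    def __init__(self, values):
        self._values = list(values)
        self.reads = 0

    def read(self):
        self.reads += 1
        return list(self._values)

def cache(load):
    # Read once, then serve every later request from memory
    cached = None
    def cached_load():
        nonlocal cached
        if cached is None:
            cached = load()
        return cached
    return cached_load

def iterate_mean_estimate(load, iterations, step=0.5):
    # Toy iterative algorithm: move an estimate halfway toward the mean each round
    estimate = 0.0
    for _ in range(iterations):
        data = load()
        estimate += step * (sum(data) / len(data) - estimate)
    return estimate

disk_source = CountingSource([2, 4, 6])
no_cache_result = iterate_mean_estimate(disk_source.read, iterations=5)

memory_source = CountingSource([2, 4, 6])
cached_result = iterate_mean_estimate(cache(memory_source.read), iterations=5)

print(no_cache_result, disk_source.reads)    # 3.875 5
print(cached_result, memory_source.reads)    # 3.875 1
```

Both runs compute the same answer; only the number of reads differs, and in a cluster each avoided read is a pass over data on disk.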

12. Lengthy Lines of Code

Hadoop has about 120,000 lines of code. The more lines of code there are, the more bugs there are likely to be, and the longer programs take to execute.

Solution-

Spark and Flink are written in Scala and Java, with much of the implementation in Scala, so they have fewer lines of code than Hadoop. As a result, programs also take less time to execute, which addresses Hadoop's lengthy-code limitation.

13. Uncertainty

Hadoop only guarantees that a data job will complete; it cannot guarantee when the job will complete.

Drawbacks of Hadoop and Its solutions – Summary

The drawbacks of Hadoop created the need for Spark and Flink, which make it easier to work with large amounts of data. Apache Spark offers in-memory data processing, which increases processing speed. Flink improves overall performance by offering a single runtime for both streaming and batch processing. Spark also offers security benefits.

Now that the drawbacks of Hadoop have been exposed, will you continue to use it for your big data initiatives, or swap it for something else?