In this article, we will discuss what a Reducer is in MapReduce, how the Reducer works in Hadoop MapReduce, the phases of the Hadoop Reducer, and how to change the number of Reducers in a Hadoop MapReduce job.

What is Hadoop Reducer?

The Reducer in Hadoop MapReduce reduces a set of intermediate values that share a key to a smaller set of values.

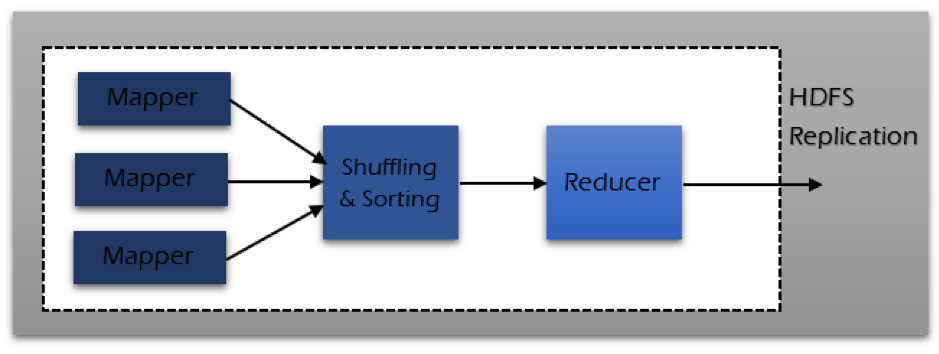

In the MapReduce job execution flow, the Reducer takes the intermediate key-value pairs generated by the mappers as its input. It then aggregates, filters, and combines those key-value pairs, which can involve a wide range of processing.
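As a rough illustration, here is a minimal plain-Python sketch (not the Hadoop Java API) of a word-count-style reduce function that both aggregates and filters: it sums every count for one key and only emits keys whose total reaches a threshold. The function name `reduce_counts`, the `min_total` threshold, and the sample data are hypothetical.

```python
from typing import Iterable, Iterator, Tuple

def reduce_counts(key: str, values: Iterable[int],
                  min_total: int = 2) -> Iterator[Tuple[str, int]]:
    """Aggregate every count for one key, then filter out rare keys."""
    total = sum(values)        # aggregate: many values -> one value
    if total >= min_total:     # filter: drop keys below the threshold
        yield key, total       # emit zero or one (key, total) pair

# Hypothetical intermediate data, already grouped by key.
grouped = {"hadoop": [1, 1, 1], "spark": [1]}
output = [pair for key, values in grouped.items()
          for pair in reduce_counts(key, values)]
print(output)  # [('hadoop', 3)]
```

Making the function a generator lets it emit zero, one, or several pairs per key, which is the same freedom a real reducer has.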

Each key is assigned to exactly one reducer during MapReduce job execution, although a single reducer usually processes many keys. Reducers run in parallel because they are independent of one another. The user sets the number of reducers for a MapReduce job.
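The key-to-reducer assignment can be sketched with hash partitioning. Hadoop's default `HashPartitioner` computes `(key.hashCode() & Integer.MAX_VALUE) % numReduceTasks`. The sketch below follows the same formula shape but uses Python's `zlib.crc32` as a stand-in hash, so the actual reducer numbers will not match Hadoop's. The `partition` helper and the sample keys are hypothetical.

```python
import zlib

def partition(key: str, num_reducers: int) -> int:
    # Same formula shape as Hadoop's default HashPartitioner,
    # (hash & Integer.MAX_VALUE) % numReduceTasks, but zlib.crc32 is a
    # stand-in for the key's hashCode(), so the numbers differ from Hadoop's.
    return (zlib.crc32(key.encode("utf-8")) & 0x7FFFFFFF) % num_reducers

keys = ["apple", "banana", "cherry", "apple", "banana"]
assignment = {key: partition(key, 3) for key in keys}
print(assignment)  # each distinct key maps to one reducer in range 0..2
```

Because the hash of a key never changes, every occurrence of "apple" lands on the same reducer, while one reducer may still receive several different keys.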

Phases of Hadoop Reducer

The Reducer has three phases:

Shuffle Phase

In this phase, the sorted output of the mappers becomes the input to the reducer. The framework fetches the relevant partition of the output of every mapper over HTTP.
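To make "the relevant partition of the output of every mapper" concrete, here is a minimal in-memory sketch. Each hypothetical mapper has already split its sorted output into one partition per reducer, and a reducer collects its own partition from all of them. A plain list lookup stands in for the HTTP fetch; `fetch_partition` and the data are illustrative only.

```python
from typing import Dict, List, Tuple

Pair = Tuple[str, int]

# Hypothetical map outputs: partition number -> sorted (key, value) pairs.
map_outputs: List[Dict[int, List[Pair]]] = [
    {0: [("apple", 1), ("cherry", 1)], 1: [("banana", 1)]},   # mapper 0
    {0: [("apple", 1)], 1: [("banana", 1), ("date", 1)]},     # mapper 1
]

def fetch_partition(reducer_id: int,
                    outputs: List[Dict[int, List[Pair]]]) -> List[List[Pair]]:
    """Collect this reducer's partition from every mapper (one sorted run each)."""
    return [out.get(reducer_id, []) for out in outputs]

runs_for_reducer_0 = fetch_partition(0, map_outputs)
print(runs_for_reducer_0)  # [[('apple', 1), ('cherry', 1)], [('apple', 1)]]
```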

Sort Phase

In the Sort phase, the framework merges the inputs fetched from the different mappers and groups them by key, since different mappers may have emitted the same key.

The Shuffle and Sort phases run concurrently: map outputs are merged while they are still being fetched.
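The merge-and-group step can be sketched in plain Python with `heapq.merge` and `itertools.groupby`. This is an analogy, not Hadoop's implementation: the sorted runs below are hypothetical outputs from two mappers for the same reducer.

```python
import heapq
from itertools import groupby
from operator import itemgetter

# Hypothetical sorted runs fetched from two mappers for one reducer.
runs = [
    [("apple", 1), ("cherry", 1)],
    [("apple", 1), ("banana", 1)],
]

# heapq.merge consumes the already-sorted runs incrementally instead of
# concatenating and re-sorting them, loosely mirroring how Hadoop merges
# map outputs while they are still being fetched.
merged = heapq.merge(*runs, key=itemgetter(0))

# Group consecutive pairs that share a key: key -> list of values.
grouped = [(key, [value for _, value in pairs])
           for key, pairs in groupby(merged, key=itemgetter(0))]
print(grouped)  # [('apple', [1, 1]), ('banana', [1]), ('cherry', [1])]
```

Grouping only works on consecutive equal keys, which is why the runs must be merged in sorted order first.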

Reduce Phase

This phase runs after Shuffle and Sort. The framework calls the reduce method once for each key together with its list of values, and the reduce task aggregates them. The output of the reduce task is written to the FileSystem through the OutputCollector.collect() method. The framework does not re-sort the Reducer's output.
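The Reduce phase loop can be sketched as follows, assuming the input is already grouped by key. A Python list stands in for `OutputCollector`, and `out.append` plays the role of `OutputCollector.collect()`; `sum_reducer` and `run_reduce_phase` are hypothetical names, not Hadoop classes.

```python
from typing import Callable, Iterable, List, Tuple

Collector = List[Tuple[str, int]]

def sum_reducer(key: str, values: Iterable[int], out: Collector) -> None:
    # Hypothetical reduce function; out.append stands in for
    # OutputCollector.collect(key, value).
    out.append((key, sum(values)))

def run_reduce_phase(grouped: Iterable[Tuple[str, List[int]]],
                     reducer: Callable[[str, Iterable[int], Collector], None]) -> Collector:
    out: Collector = []
    for key, values in grouped:   # one reduce call per key
        reducer(key, values, out)
    return out                    # kept in call order; never re-sorted

result = run_reduce_phase([("apple", [1, 1]), ("banana", [1])], sum_reducer)
print(result)  # [('apple', 2), ('banana', 1)]
```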

Number of Reducers in Hadoop MapReduce

The user sets the number of reducers with the Job.setNumReduceTasks(int) method. A common rule of thumb for the right number of reducers is:

0.95 or 1.75 multiplied by (<no. of nodes> * <no. of maximum containers per node>)
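Applied to a hypothetical cluster of 10 nodes with at most 8 containers each (80 containers in total), the rule of thumb gives the numbers below. Rounding down to a whole number of reducers is an assumption; the formula itself does not say how to round.

```python
import math

def suggested_reducers(nodes: int, max_containers_per_node: int,
                       factor: float) -> int:
    """Apply the 0.95 / 1.75 rule of thumb (rounding down is an assumption)."""
    return math.floor(factor * (nodes * max_containers_per_node))

print(suggested_reducers(10, 8, 0.95))  # 76
print(suggested_reducers(10, 8, 1.75))  # 140
```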

With 0.95, all of the reducers can launch immediately and start transferring map outputs as the maps finish.

With 1.75, the faster nodes finish their first round of reducers and then launch a second wave of reducers, which does a much better job of load balancing.
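The "one wave versus two waves" behaviour can be checked with a simplified model that assumes each container runs one reduce task at a time and no other tasks compete for containers. For a hypothetical cluster of 10 nodes with 8 containers each, 76 reducers (0.95 × 80) fit in one wave, while 140 reducers (1.75 × 80) need two.

```python
import math

def reduce_waves(num_reducers: int, nodes: int,
                 max_containers_per_node: int) -> int:
    """Rounds needed if each container runs one reducer at a time (simplified)."""
    slots = nodes * max_containers_per_node
    return math.ceil(num_reducers / slots)

print(reduce_waves(76, 10, 8))   # 1
print(reduce_waves(140, 10, 8))  # 2
```

In the second case, nodes that finish their first reducer early pick up the remaining tasks, which is where the load-balancing benefit comes from.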

Increasing the number of reducers has the following effects:

• Framework overhead increases.

• Load balancing improves.

• Cost of failures decreases.
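Two of these effects can be illustrated with a deliberately simple toy model, which is an assumption and not a Hadoop formula. Every reducer pays the same hypothetical fixed startup cost (`startup_cost_s`), and input is spread evenly, so a failed reducer only has to redo its own share. The model covers overhead and failure cost only, not load balancing.

```python
from typing import Tuple

def toy_tradeoff(total_input_gb: float, num_reducers: int,
                 startup_cost_s: float = 2.0) -> Tuple[float, float]:
    """Toy model: (total startup overhead in seconds, GB redone if one reducer fails).

    Assumes an even data split and a fixed, hypothetical per-reducer startup cost.
    """
    overhead = num_reducers * startup_cost_s
    rework_on_failure = total_input_gb / num_reducers
    return overhead, rework_on_failure

for n in (10, 100):
    print(n, toy_tradeoff(100.0, n))
# 10 (20.0, 10.0)
# 100 (200.0, 1.0)
```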

Conclusion

In short, the Reducer takes the mappers' output as its input, processes the key-value pairs, and produces the job's final output.