Big data has become a buzzword in the IT field, and it is making its way into almost every domain. Hadoop is one of the most important big data technologies, so the rising demand for big data is driving demand for professionals with Hadoop skills. Both freshers and experienced professionals want to seize the opportunities that big data brings, and you may be wondering how to build a career in Hadoop. In this tutorial, we discuss careers in Hadoop: the various Hadoop job profiles, their roles, and their salaries. Finally, we look at Hadoop applications in different sectors.

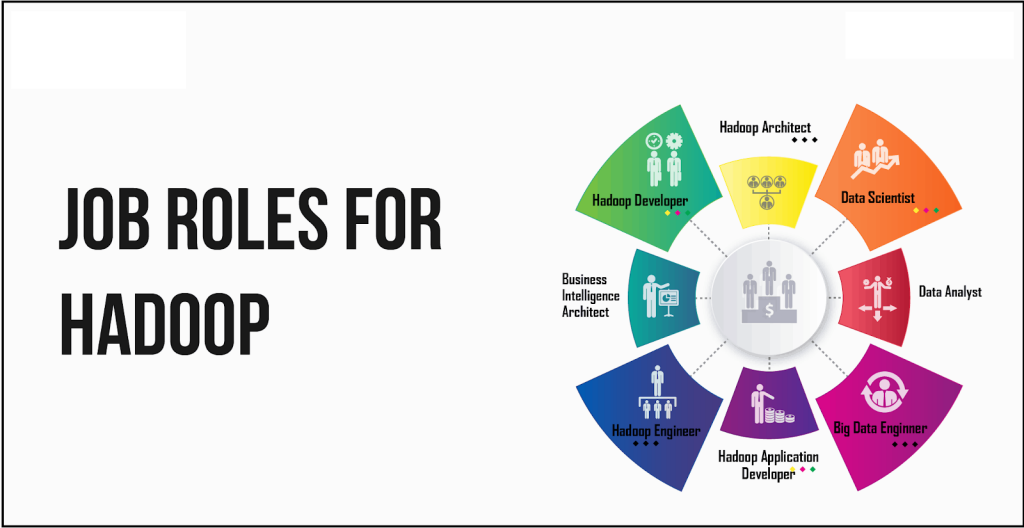

Hadoop Job Titles and Roles

There is no strict prerequisite for a career in Hadoop; what it takes is hard work and dedication. Freshers, experienced IT professionals, and people from non-IT industries are all building careers in Hadoop. Getting from the early stages of the job hunt to an offer letter can take a lot of effort, so first choose among the job roles Hadoop has to offer and direct your effort accordingly. Let us look at the different Hadoop job roles:

1. Big Data Analyst

A Big Data Analyst uses big data analytics to evaluate the company’s technical performance and recommends system enhancements. The focus is on issues such as streaming live data and data migrations. Analysts team up with data scientists and data architects to ensure the streamlined implementation of services, to profile source information, and to set characteristics. They also implement big data processes such as parsing, text annotation, filtering, and enrichment.

2. Big Data Architect

A Big Data Architect is responsible for the full life cycle of a Hadoop solution. This covers requirement analysis, platform selection, and the design of the technical architecture, as well as application design and development, testing, and the design of the proposed solution. Architects should know the pros and cons of various technologies and platforms. They document use cases, solutions, and recommendations. Big Data Architects need to work creatively and analytically to solve problems.

3. Data Engineer

Data Engineers are responsible for Hadoop development and for scoping and delivering different big data solutions. They contribute to designing solutions on the high-level architecture and manage technical communication between vendors and internal systems. They maintain production systems such as Kafka, Cassandra, and Elasticsearch. Data Engineers also build cloud-based platforms that make it easy to develop new applications.

4. Data Scientist

Data Scientists use their analytical, statistical, and programming skills to collect and interpret data, and then use this data to develop data-driven solutions for tough business challenges. They work with stakeholders throughout the organization to see how company data can be leveraged to drive business solutions. They mine and evaluate data from company databases in order to improve product development, marketing techniques, and business strategies.

5. Hadoop Developer

Hadoop Developers handle the installation and configuration of Hadoop and write MapReduce code for Hadoop clusters. They convert complex technical and functional requirements into detailed designs. They test software models and hand them over to the operations team. Hadoop Developers also maintain data security and privacy, and they analyze large datasets to derive insights.
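To give a feel for the MapReduce style of code mentioned above, here is a minimal word-count sketch. It is an illustration only: it runs in a single Python process rather than on a cluster, and the function names (`map_phase`, `shuffle_phase`, `reduce_phase`, `word_count`) are hypothetical, not part of any Hadoop API. Real Hadoop jobs are commonly written in Java or run through Hadoop Streaming, where the framework itself performs the grouping step between map and reduce.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(line: str) -> Iterator[Tuple[str, int]]:
    """Emit a (word, 1) pair for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle_phase(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Group values by key; on a cluster the framework does this step."""
    grouped: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    """Sum the counts collected for one word."""
    return key, sum(values)


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    """Run map, shuffle, and reduce in sequence within one process."""
    pairs = (pair for line in lines for pair in map_phase(line))
    grouped = shuffle_phase(pairs)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    print(word_count(["big data", "Big Hadoop"]))
```

The point of the split into three functions is that the map and reduce steps never share state, which is what lets a framework such as Hadoop run them on many machines in parallel.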

6. Hadoop Tester

A Hadoop Tester’s job is to troubleshoot and fix bugs in Hadoop applications. Testers make sure that MapReduce jobs, Pig Latin scripts, and HiveQL scripts work as expected. They develop test cases in Hadoop, Hive, and Pig to identify bugs, report defects to the development team and manager, and drive them to closure. They also create a defect report by consolidating all defects.

7. Hadoop Administrator

A Hadoop Administrator is accountable for setting up a Hadoop cluster and for its backup, recovery, and maintenance. Admins keep track of cluster connectivity and security, and they set up new users. They also do capacity planning and monitor the performance of jobs on the cluster. In short, the Hadoop Admin maintains and supports the Hadoop cluster.

8. Hadoop Architect

A Hadoop Architect plans and designs the big data Hadoop architecture. This includes creating the requirement analysis, choosing the platform, and designing the technical architecture and the application. The architect’s responsibilities also include deploying the proposed Hadoop solution.

Salaries for Hadoop Professionals

The average pay of a Hadoop developer in the United States was $121,243 a year as of March 2019. Annual salaries range from as low as $45,500 to as high as $172,000. Most Hadoop developer salaries across the USA fall between $103,000 (25th percentile) and $135,000 (75th percentile).

The Hadoop developer job market in Mumbai and the surrounding area is very active. People working as Hadoop developers in this area make an average of $121,234 annually, which is roughly the same as the national average for the US.

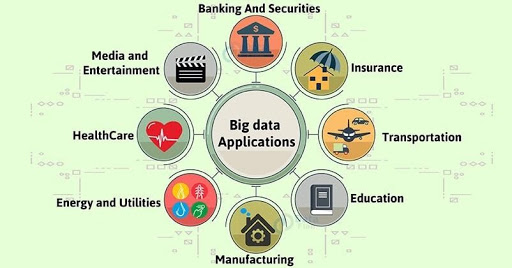

Hadoop Applications in Various Sectors

Let’s discuss Hadoop applications in various sectors in detail:

1. Financial sector

Both the volume and the value of data collected in the financial sector are increasing day by day. The financial sector uses Hadoop because of its power in data evaluation and processing. Banks, insurance companies, and securities firms rely on Hadoop to store and process large amounts of data. Several facets of the financial sector use Hadoop.

One of the most important areas is fraud detection. Financial crimes and data breaches are among the problems the industry faces. Big data analytics is used to detect and prevent internal and external fraud, and it does so on cost-effective infrastructure. Evaluating point-of-sale data and transaction authorizations helps banks identify and mitigate fraud.

2. Communication Media & Entertainment

With a huge base of digital consumers, the media and entertainment industry is ready to leverage data for profitable customer engagement. There is a lot of potential in the data this industry collects. Companies can mine it to understand what content, movies, shows, and music consumers like. They can infer the audience’s interests from various data sources such as reviews, clickstreams, log files, history, and searches.

Intel published a case study about an entirely new big data analytics engine that Caesars Entertainment built using Cloudera Hadoop and a cluster of Xeon E5 servers. The purpose of this analytics engine was to support new marketing campaigns. The campaigns targeted customers with interests beyond traditional gaming, including entertainment, dining, and online social gaming. The results of this project have been spectacular: it increased Caesars’ return on marketing programs and dramatically decreased the time needed to respond to prominent customer events.

3. Healthcare Providers

Healthcare expenses in the USA now represent 17.6 percent of GDP, which is nearly $600 billion more than the expected benchmark. McKinsey estimates that applying big data could reduce healthcare spending by $300 billion to $450 billion.

A leading healthcare organization that serves more than a hundred million patients collects petabytes of claims and treatment data. It plans to create a new data repository service so that its customers can run an expanded set of analytics. MapR is the only Hadoop platform that delivers a volume-based isolated environment with quotas and secure access for end users.

Surgeons at the University of Iowa Hospitals and Clinics wanted to know which infections patients are vulnerable to, so that they could make treatment decisions in the operating room. The solution merged each patient’s historical data with live vital signs to predict the patient’s vulnerability to infections. It gave doctors real-time predictive decision-making assistance during surgery.

4. Education

The big data challenges in the education sector include:

• Data from varied sources.

• Staff and institutions that are not trained in big data.

• Issues of privacy and data protection.

• Companies struggle to find the talent they need in their fields. This is because the current education system does not have a curriculum that matches industry requirements, and in many places the syllabus is out of date. Using Hadoop, educational institutes can evaluate the jobs that companies post, learn about recent trends in the industry, and design their syllabus accordingly.
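The idea of evaluating job postings to spot industry trends can be sketched in a few lines. This is a simplified, single-machine illustration under stated assumptions: the `SKILLS` keyword list and the `skill_demand` function are hypothetical, and a real pipeline on Hadoop would distribute the same counting logic over a much larger set of postings.

```python
from collections import Counter
from typing import Iterable, List, Tuple

# Hypothetical skill keywords; an institute would supply its own list.
SKILLS = ("hadoop", "spark", "hive", "kafka", "python")


def skill_demand(postings: Iterable[str], top_n: int = 3) -> List[Tuple[str, int]]:
    """Count how many postings mention each skill; return the most common."""
    counts: Counter = Counter()
    for text in postings:
        # Treat commas as separators so "Spark," still matches "spark".
        words = set(text.lower().replace(",", " ").split())
        counts.update(skill for skill in SKILLS if skill in words)
    return counts.most_common(top_n)


if __name__ == "__main__":
    sample = [
        "Hadoop and Spark engineer",
        "Spark, Python developer",
        "Hive analyst with Hadoop",
    ]
    print(skill_demand(sample, top_n=2))
```

Each posting counts a skill at most once, so the result reflects how many postings ask for a skill rather than how often the word is repeated.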

5. Manufacturing and Natural Resources

The big data challenges in this sector are:

• An upsurge in the volume, velocity, and complexity of data in the natural resources sector.

• Large volumes of untapped data from the manufacturing industry.

• Underuse of data, which prevents improved quality, energy efficiency, reliability, and better profit margins.

• Big data helps manufacturers with supply-chain management by giving them the ability to track the location of their products. The system tracks the coordinates of each parcel using barcode scanners and radio-frequency emission devices, which transmit the exact location of the parcel.
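The parcel-tracking idea above boils down to keeping the most recent scan for each parcel. The sketch below shows that step on a single machine; the `ScanEvent` fields and the `latest_locations` function are assumptions made for illustration and do not describe any particular tracking system.

```python
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass(frozen=True)
class ScanEvent:
    """One hypothetical reading from a barcode scanner or RF device."""
    parcel_id: str
    timestamp: int      # assumed to be seconds since the epoch
    latitude: float
    longitude: float


def latest_locations(events: Iterable[ScanEvent]) -> Dict[str, ScanEvent]:
    """Keep only the most recent scan event for each parcel."""
    latest: Dict[str, ScanEvent] = {}
    for event in events:
        current = latest.get(event.parcel_id)
        if current is None or event.timestamp > current.timestamp:
            latest[event.parcel_id] = event
    return latest


if __name__ == "__main__":
    scans = [
        ScanEvent("P1", 100, 19.07, 72.87),
        ScanEvent("P2", 150, 28.61, 77.20),
        ScanEvent("P1", 200, 18.52, 73.85),
    ]
    for parcel_id, event in latest_locations(scans).items():
        print(parcel_id, event.latitude, event.longitude)
```

Because the function only compares timestamps per parcel, the events can arrive in any order, which matters when readings come from many devices.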

Summary

If you are reading this Hadoop job tutorial, you probably have some interest in Hadoop or are researching this field. A job as a Hadoop professional offers good career prospects, provided that you are interested in the field and are prepared to keep up with new big data technologies. Doing well in any domain requires dedication and hard work, and the same is true for Hadoop.