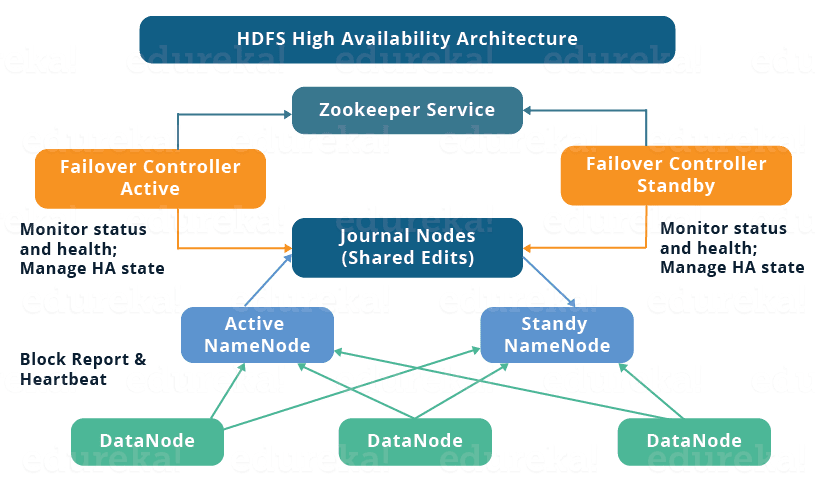

Before Hadoop 2.0, that is, in Hadoop 1.x, the NameNode was a single point of failure (SPOF). If the NameNode failed, the entire cluster stopped functioning, and an administrator had to intervene manually to bring it back up with the help of the Secondary NameNode, which meant downtime. Hadoop 2.0 introduced a single standby NameNode with support for automatic failover, and Hadoop 3.0 supports multiple standby NameNodes, which makes the system even more highly available. In this tutorial, we talk about Hadoop's high availability. We look at the types of failover and discuss in detail how ZooKeeper and the ZKFailoverController provide automatic failover.

1. What is Hadoop High Availability?

Hadoop 2.0 added support for a standby NameNode alongside the active one, and Hadoop 3.0 allows more than one standby. This overcomes the single point of failure (SPOF) problem: an extra NameNode (a passive standby NameNode) is ready to take over through failover. This is what high availability means in Hadoop.

i. What is Failover?

Failover is the process by which a system transfers control to a secondary system in the event of a failure.

There are two types of failover:

• Graceful Failover – The administrator initiates this type of failover manually, for example during routine system maintenance. Control moves to the standby NameNode only when the administrator requests it; it does not happen automatically.

• Automatic Failover – The system transfers control to the standby NameNode automatically, without manual intervention. Without it, if the active NameNode goes down, the cluster stays unavailable until someone intervenes. Automatic failover is therefore what lets Hadoop high availability act as an insurance policy against a single point of failure.
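The difference between the two failover types can be made concrete with a small model. This is only a sketch: the class `NameNodePair`, its methods, and the node names `nn1` and `nn2` are hypothetical and are not part of any Hadoop API.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class NameNodePair:
    """Toy model of an active/standby NameNode pair (illustrative names only)."""
    active: Optional[str] = "nn1"
    standby: Optional[str] = "nn2"
    automatic_failover: bool = False
    log: List[str] = field(default_factory=list)

    def graceful_failover(self) -> None:
        """Administrator-initiated: swap roles while both nodes are up."""
        self.active, self.standby = self.standby, self.active
        self.log.append(f"graceful: {self.active} is now active")

    def active_crashed(self) -> None:
        """The active node fails; the outcome depends on the failover mode."""
        failed = self.active
        if self.automatic_failover and self.standby is not None:
            # Automatic failover: the standby is promoted without an operator.
            self.active, self.standby = self.standby, None
            self.log.append(f"automatic: {self.active} replaced {failed}")
        else:
            # No automatic failover: nothing is active until someone steps in.
            self.active = None
            self.log.append(f"outage: {failed} is down, waiting for an administrator")

    def available(self) -> bool:
        return self.active is not None


manual = NameNodePair()
manual.graceful_failover()      # planned maintenance: nn2 takes over
manual.active_crashed()         # unplanned crash with no automatic failover

auto = NameNodePair(automatic_failover=True)
auto.active_crashed()           # the standby is promoted automatically

print(manual.available(), auto.available())
```

In this model the manually managed pair ends up with no active node after a crash, while the pair with automatic failover keeps serving from the former standby.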

2. NameNode High Availability in Hadoop

Automatic failover adds the following components to an HDFS deployment:

• ZooKeeper quorum.

• ZKFailoverController Process (ZKFC).

i. ZooKeeper Quorum

ZooKeeper is a highly available, centralized service for maintaining small amounts of coordination data, such as configuration and naming information. It provides group services and synchronization, keeps clients informed about changes in that data, and tracks client failures. Automatic HDFS failover relies on ZooKeeper for:

• Failure detection- Each NameNode machine maintains a persistent session in ZooKeeper. If the machine crashes, the session expires and ZooKeeper notifies the other NameNodes that a failover should be triggered.

• Active NameNode election- ZooKeeper provides a simple mechanism for electing exactly one node as active. When the current active NameNode fails, another NameNode can take an exclusive lock in ZooKeeper, indicating that it should become the next active NameNode.
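The two roles above can be illustrated with an in-memory stand-in for ZooKeeper. This is a minimal sketch, not the real ZooKeeper client API: `TinyCoordinator` and all of its methods are hypothetical, and real sessions expire through missed heartbeats rather than an explicit call.

```python
class TinyCoordinator:
    """In-memory stand-in for ZooKeeper: sessions plus one exclusive lock."""

    def __init__(self):
        self.sessions = set()      # names of nodes with a live session
        self.lock_owner = None     # session currently holding the lock
        self.watchers = []         # callbacks fired when the lock is released

    def open_session(self, name):
        self.sessions.add(name)

    def try_lock(self, name):
        """Grant the lock only to a live session, and only if the lock is free."""
        if name in self.sessions and self.lock_owner is None:
            self.lock_owner = name
            return True
        return False

    def watch_lock(self, callback):
        self.watchers.append(callback)

    def expire_session(self, name):
        """Simulate a crash: the session ends and the lock it held disappears."""
        self.sessions.discard(name)
        if self.lock_owner == name:
            self.lock_owner = None
            for callback in list(self.watchers):
                callback()         # failure detection: notify waiting nodes


zk = TinyCoordinator()
for node in ("nn1", "nn2"):
    zk.open_session(node)

zk.try_lock("nn1")                          # nn1 wins the first election
zk.watch_lock(lambda: zk.try_lock("nn2"))   # nn2 waits for the lock to free up
zk.expire_session("nn1")                    # nn1 crashes, its session expires
print(zk.lock_owner)                        # nn2
```

The lock is exclusive, so at most one node can consider itself active at a time, and tying the lock to the session is what turns a crash into a notification for the waiting node.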

ii. ZKFailoverController (ZKFC)

ZKFC is a ZooKeeper client that also monitors and manages the state of the NameNode. Every machine that runs a NameNode also runs a ZKFC.

ZKFC handles:

• Health Monitoring – ZKFC periodically pings its local NameNode with a health-check command. If the NameNode does not respond in time, for example because it has crashed or frozen, ZKFC marks it as unhealthy.

• ZooKeeper Session Management – While the local NameNode is healthy, ZKFC keeps a session open in ZooKeeper. If the local NameNode is active, ZKFC also holds a special lock znode. The lock is an ephemeral node, so it is deleted automatically when the session expires.

• ZooKeeper-based Election – If the local NameNode is healthy and ZKFC sees that no other node currently holds the lock znode, it tries to acquire the lock itself. If it succeeds, it has won the election and becomes responsible for running a failover to make its local NameNode active. The failover process is similar to a manual failover: first the previous active node is fenced, if necessary, and then the local NameNode transitions to the active state.
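The three responsibilities above can be sketched as a single decision step plus a failover routine. This is a hedged illustration rather than the actual ZKFC implementation: the function names, the action strings, and the `fence` callback are hypothetical, and aborting when fencing fails is an assumption made for this sketch.

```python
def zkfc_step(local_healthy, holds_lock, lock_free):
    """Return the action a ZKFC-like controller would take after one health check."""
    if not local_healthy:
        # An unhealthy NameNode should not stay active or join the election.
        return "release_lock" if holds_lock else "stay_out_of_election"
    if holds_lock:
        return "remain_active"
    if lock_free:
        # Healthy, and nobody holds the lock: try to win the election.
        return "acquire_lock_then_failover"
    return "remain_standby"


def run_failover(previous_active, local, fence):
    """Fence the previous active node if there is one, then promote the local node."""
    steps = []
    if previous_active is not None:
        if not fence(previous_active):
            # Assumption: promotion is not attempted if fencing cannot be confirmed.
            steps.append(f"fencing {previous_active} failed; abort")
            return steps
        steps.append(f"fenced {previous_active}")
    steps.append(f"{local} transitioned to active")
    return steps


# The standby's ZKFC sees a healthy local NameNode and a free lock.
action = zkfc_step(local_healthy=True, holds_lock=False, lock_free=True)
steps = run_failover("nn1", "nn2", fence=lambda node: True)
print(action, steps)
```

Fencing comes before promotion so that the previous active node cannot keep writing after the new one takes over.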

Hadoop High Availability Summary

To summarize, ZooKeeper daemons are typically configured to run on three or five nodes. Because ZooKeeper has light resource requirements, it can run on the same nodes as the active and standby NameNodes. Many operators choose to deploy the third ZooKeeper process on the same node as the YARN ResourceManager. It is advisable to keep ZooKeeper data on disks separate from HDFS metadata, as this gives the best performance and isolation.
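The preference for three or five nodes follows from ZooKeeper needing a majority of its servers to be up. The arithmetic can be sketched as follows; the function name `tolerated_failures` is made up for this example.

```python
def tolerated_failures(ensemble_size):
    """Number of servers that can fail while a majority is still running."""
    majority = ensemble_size // 2 + 1
    return ensemble_size - majority


for size in (3, 4, 5):
    print(size, "servers tolerate", tolerated_failures(size), "failure(s)")
```

Three servers tolerate one failure and five tolerate two, while a fourth server adds no extra tolerance over three, which is why odd ensemble sizes are the usual choice.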