In this tutorial, we discuss the concept of data locality in Hadoop. We start with an introduction to data locality in Hadoop MapReduce, then cover why Hadoop needs it, the categories of data locality in MapReduce, and data locality optimization. Finally, we look at the advantages of the data locality principle.

What is Data Locality in Hadoop MapReduce?

Data locality in Hadoop is the practice of moving the computation close to where the data actually resides, instead of moving large volumes of data to the computation. This reduces network congestion and increases the overall throughput of the system.

The main drawback Hadoop faced was cross-switch network traffic caused by the enormous amount of data being moved. Data locality was introduced to overcome this drawback.

In Hadoop, HDFS stores the datasets. It splits each file into blocks and stores those blocks across the DataNodes. When a client submits a MapReduce job, the framework uses the block locations tracked by the NameNode to send the MapReduce code to the DataNodes that hold the data the job needs.
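The idea of splitting a file into blocks and then sending each task to a node that already holds its block can be sketched in a few lines. This is a toy model, not Hadoop's scheduler: the 128 MB block size, the node names, the replica map, and the helper names `split_into_blocks` and `place_map_tasks` are all assumptions made for illustration.

```python
import math

BLOCK_SIZE = 128 * 1024 * 1024  # assumed block size of 128 MB


def split_into_blocks(file_size, block_size=BLOCK_SIZE):
    """Return (offset, length) pairs that cover a file of file_size bytes."""
    count = math.ceil(file_size / block_size)
    return [
        (i * block_size, min(block_size, file_size - i * block_size))
        for i in range(count)
    ]


def place_map_tasks(block_locations):
    """Assign one map task per block to a node that already stores the block.

    block_locations maps a block id to the nodes holding a replica.
    This toy version picks the replica holder with the fewest tasks so far;
    it ignores task slots, racks, and node failures.
    """
    load = {}
    assignment = {}
    for block_id, nodes in block_locations.items():
        chosen = min(nodes, key=lambda n: load.get(n, 0))
        assignment[block_id] = chosen
        load[chosen] = load.get(chosen, 0) + 1
    return assignment


blocks = split_into_blocks(300 * 1024 * 1024)  # a 300 MB file -> 3 blocks
locations = {  # hypothetical replica map: block id -> nodes with a copy
    0: ["node1", "node2", "node3"],
    1: ["node2", "node3", "node4"],
    2: ["node1", "node3", "node4"],
}
plan = place_map_tasks(locations)
```

In this sketch every map task ends up on a node that stores its input block, so no block has to cross the network before processing starts.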

The requirement for Hadoop Data Locality

To get the full benefit of data locality, a Hadoop deployment must satisfy the following conditions:

• First, the Hadoop cluster should have a suitable topology, and the Hadoop code should be able to read data locality information.

• Second, Apache Hadoop should know the topology of the nodes where tasks are executed, and it should know where the data is placed.
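One way to picture what "knowing the topology" means is to give every node a position in a tree and measure how far apart two positions are. The following is a minimal sketch under that assumption. The path format (`/datacenter/rack/node`), the names in it, and the `distance` helper are hypothetical and only illustrate the idea; they are not Hadoop's own implementation.

```python
def distance(path_a, path_b):
    """Count the hops from each node up to their closest common ancestor.

    Paths are assumed to look like "/dc1/rack1/node1".
    """
    a = path_a.strip("/").split("/")
    b = path_b.strip("/").split("/")
    common = 0
    for x, y in zip(a, b):
        if x != y:
            break
        common += 1
    return (len(a) - common) + (len(b) - common)


same_node = distance("/dc1/rack1/node1", "/dc1/rack1/node1")
same_rack = distance("/dc1/rack1/node1", "/dc1/rack1/node2")
other_rack = distance("/dc1/rack1/node1", "/dc1/rack2/node3")
```

A smaller distance means the data is closer to the task, which gives a scheduler a simple number to compare when it decides where to run a task.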

Categories of Data locality in Hadoop MapReduce

The categories of data locality in Hadoop are as follows:

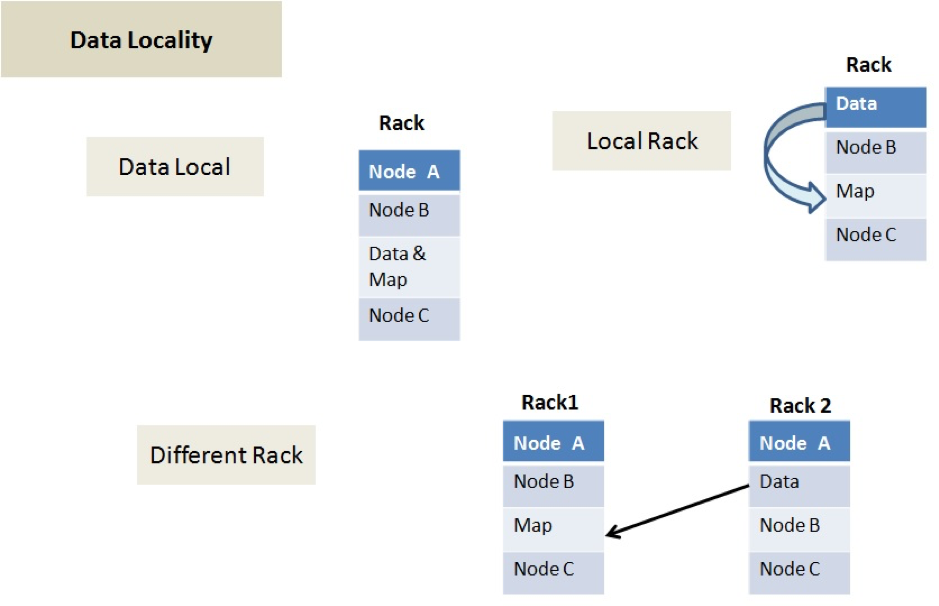

Data local data locality in Hadoop

In this category, the data is located on the same node as the mapper that processes it, so the data is as close to the computation as it can be. Data-local is the most preferred scenario.

Intra-Rack data locality in Hadoop

It is not always feasible to run the mapper on the same DataNode as the data because of resource constraints. In that case, the preferred option is to run the mapper on a different node in the same rack.

Inter-Rack data locality in Hadoop

Sometimes it is not feasible to run the mapper on a different node in the same rack either. In that situation, the mapper runs on a node in a different rack. Inter-rack data locality is the least preferred scenario.
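The three categories and their order of preference can be expressed as a small classification routine. This is only a sketch: the node names, the `rack_of` mapping, and the functions `classify` and `pick_node` are assumptions for illustration, and a real scheduler weighs many more factors.

```python
from enum import IntEnum


class Locality(IntEnum):
    DATA_LOCAL = 0  # mapper runs on a node that stores the block
    INTRA_RACK = 1  # mapper runs on another node in the same rack
    INTER_RACK = 2  # mapper runs on a node in a different rack


def classify(task_node, replica_nodes, rack_of):
    """Return the locality category for running a task on task_node."""
    if task_node in replica_nodes:
        return Locality.DATA_LOCAL
    replica_racks = {rack_of[n] for n in replica_nodes}
    if rack_of[task_node] in replica_racks:
        return Locality.INTRA_RACK
    return Locality.INTER_RACK


def pick_node(free_nodes, replica_nodes, rack_of):
    """Pick the free node with the best (lowest) locality category."""
    return min(free_nodes, key=lambda n: classify(n, replica_nodes, rack_of))


# Hypothetical cluster: two racks with two nodes each.
rack_of = {"n1": "r1", "n2": "r1", "n3": "r2", "n4": "r2"}
replicas = ["n1"]  # the block is stored only on n1 in this example

best = pick_node(["n3", "n2"], replicas, rack_of)  # n1 is busy
```

Here `n1` has no free capacity, so the sketch falls back to `n2`, which shares a rack with the data, rather than `n3` in the other rack.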

Hadoop Data locality Optimization

Data locality is the main benefit of Hadoop MapReduce, but it cannot always be achieved in practice, for reasons such as heterogeneous clusters, speculative execution, data distribution and placement, and data layout.

These challenges become more pronounced in large clusters: the more DataNodes and data a cluster has, the lower the locality tends to be. Large clusters are also rarely completely homogeneous. Some nodes are newer and faster than others, which throws the data-to-compute ratio out of balance.

Speculative execution also works against locality: a speculatively launched task may run on a node where the data is not local, although it does make use of available computing power. Data layout and placement are another root cause. Moreover, non-local data processing puts a strain on the network, so the network becomes the bottleneck and limits scalability.

We can improve data locality by first detecting which jobs have a data locality problem or have degraded over time. Solving the problem is more complex and involves modifying the data placement and data layout and using a different scheduler. After that, we have to validate whether a new execution of the same workload achieves a good data locality ratio.
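The detection and validation steps both rely on a data locality ratio, which can be computed as the share of map tasks that ran on a node holding their input. The sketch below assumes that per-run counts of data-local and total map tasks are available (Hadoop job counters report figures of this kind). The job names, the numbers, the 0.8 threshold, and the helper names are hypothetical.

```python
def locality_ratio(data_local_maps, total_maps):
    """Fraction of map tasks that ran on a node holding their input block."""
    return data_local_maps / total_maps if total_maps else 0.0


def find_degraded(history, threshold=0.8):
    """Return the jobs whose latest run looks problematic.

    history maps a job name to a chronological list of
    (data_local_maps, total_maps) tuples, one per run. A job is flagged
    when its latest ratio is below the threshold or below its first run.
    """
    flagged = []
    for job, runs in history.items():
        first = locality_ratio(*runs[0])
        latest = locality_ratio(*runs[-1])
        if latest < threshold or latest < first:
            flagged.append(job)
    return sorted(flagged)


history = {  # made-up numbers for illustration
    "etl_daily": [(95, 100), (90, 100), (60, 100)],
    "report_weekly": [(88, 100), (92, 100)],
    "ad_hoc": [(40, 80)],
}
suspects = find_degraded(history)

# After changing placement or scheduling, rerun and validate the ratio.
rerun_ok = locality_ratio(90, 100) >= 0.8
```

The same `locality_ratio` function serves both ends of the process: it flags the jobs worth investigating and confirms whether a rerun of the same workload has improved.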

Advantages of data locality in Hadoop

• High Throughput – Data locality in Hadoop improves the overall throughput of the system.

• Faster Execution – With data locality, the framework sends the code to the node where the data resides instead of moving enormous amounts of data to the code. Because the program is usually far smaller than the data it processes, moving the data would make network transfer the bottleneck. Avoiding that transfer makes Hadoop faster.
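A rough calculation shows why moving code is cheaper than moving data. The figures below are assumptions chosen for illustration: a 128 MB data block, a 1 MB program, and a 1 Gbit/s link with no protocol overhead or contention.

```python
def transfer_seconds(size_bytes, bandwidth_bits_per_second):
    """Ideal time to send size_bytes over a link, ignoring overhead."""
    return size_bytes * 8 / bandwidth_bits_per_second


MB = 1024 * 1024
LINK = 1_000_000_000  # assumed 1 Gbit/s link

block_time = transfer_seconds(128 * MB, LINK)  # moving one data block
code_time = transfer_seconds(1 * MB, LINK)     # moving the program
ratio = block_time / code_time                 # how much slower data is
```

Under these assumptions, shipping one block takes about a second while shipping the program takes a few milliseconds, and the gap grows with every additional block a job has to read.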

Conclusion

In conclusion, data locality in Hadoop reduces network congestion, which improves the overall throughput of the system and makes Hadoop faster.